Since I started experimenting with Solaris in my home lab, I’ve really wanted to try managing those systems with some sort of configuration management software. I originally thought about trying Rex, a Perl-based configuration management tool, but I have yet to take the time to learn it. I do, however, know Ansible, and it came as a welcome surprise that I can install Python, which Ansible needs on the managed host, on Solaris without the hassle of compiling it from source: Python can be installed with the pkgutil tool from OpenCSW. In addition, the community.general collection in Ansible includes a pkgutil module that allows Yum/Apt-like package management. So one day I decided to see whether Ansible would work on Solaris.

Note: your results may vary in following these directions; I can’t guarantee that they will work for you.

Prepare Solaris 9 for Ansible

I performed the below steps on SPARC and x86 Solaris 9.

- On Solaris 9 x86, I installed the Solaris 9 x86 Recommended Patch Cluster (9_x86_Recommended.zip), found here: http://ftp.lanet.lv/ftp/unix/sun-info/sun-patches/. I also had to install the libm patch 111728-03 found on the same site.

- To install the patch cluster, SCP 9_x86_Recommended.zip to /tmp on your Solaris 9 system and unzip it. Drop to single-user mode with init s, cd to /tmp/9_x86_Recommended, and run ./install_cluster. Reboot the system after completion.

- To install patch 111728-03, SCP 111728-03.zip to /tmp on your Solaris 9 system, unzip it, and install it with patchadd -d /tmp/111728-03.

- On Solaris 9 SPARC, running on my SunBlade 100, I installed the Solaris 9 Recommended Patch Cluster (9_Recommended.zip), found here: http://ftp.lanet.lv/ftp/unix/sun-info/sun-patches/.

- Just like for the x86 platform, SCP 9_Recommended.zip to /tmp on your Solaris 9 system and unzip it. Drop to single user mode with init s. cd to /tmp/9_Recommended and run ./install_cluster. This took hours on my SB-100 with a spinning disk. Reboot the system after completion.
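Before moving on, it can be worth confirming that a required patch, or a newer revision of it, actually made it onto the system. The function below is a minimal sketch of such a check. The name patch_installed is hypothetical, and it assumes that showrev -p prints one line per installed patch beginning with "Patch: <number>-<revision>".

```bash
#!/bin/bash
# patch_installed NUMBER-REV  (hypothetical helper)
# Reads `showrev -p` output on stdin and succeeds if patch NUMBER is installed
# at revision REV or newer. Assumes lines look like "Patch: 111728-03 ...".
patch_installed() {
    local base=${1%-*} rev=${1#*-}
    local label id rest
    while read -r label id rest; do
        # Skip anything that is not a "Patch: NNNNNN-NN ..." line
        [ "$label" = "Patch:" ] || continue
        [ "${id%-*}" = "$base" ] || continue
        # 10# forces base 10 so revisions such as "08" are not read as octal
        if [ $((10#${id#*-})) -ge $((10#$rev)) ]; then
            return 0
        fi
    done
    return 1
}
```

For example, showrev -p | patch_installed 111728-03 && echo "libm patch present". Accepting a higher revision is a design choice here, on the assumption that a later revision of a patch carries the earlier fixes.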

While not all of the steps below are strictly necessary, I wrote a script that takes care of the following:

- Install and configure pkgutil.

- Install sudo with pkgutil (you can use the root account, but sudo is the preferred method).

- Install Python with pkgutil (I chose the basic Python 2.6 package, but there are also packages for 2.7 and 3.1, both of which I try later on).

- Create a local ansible user and group with a home directory in /export/home.

- Create a sudo entry for the ansible user.

- Add an SSH public key to the ansible user's .ssh/authorized_keys.

I ended up writing both a Bash script and a Perl script for this purpose. I prefer and recommend the Bash version, as it is much simpler and cleaner, but I have included the Perl one as well in case you are curious and want to look at some ugly Perl code.

```bash
#!/bin/bash

PUBKEY='YOUR_SSH_PUBLIC_KEY'

# Install pkgutil from /tmp/pkgutil.pkg
if [[ ! -f "/opt/csw/bin/pkgutil" ]] ; then
    if [[ ! -f "/tmp/pkgutil.pkg" ]] ; then
        echo "You must copy pkgutil.pkg to /tmp"
        exit 1
    else
        echo "Installing pkgutil."
        pkgadd -d /tmp/pkgutil.pkg
    fi
fi

# Set the mirror in /etc/opt/csw/pkgutil.conf
perl -pi -e 's/^#?mirror=\S+/mirror=http:\/\/your_mirror_ip\/solaris\/opencsw\/bratislava/' /etc/opt/csw/pkgutil.conf

# Install packages if needed
if [[ ! -f "/opt/csw/bin/python" ]] ; then
    echo "Installing python."
    /opt/csw/bin/pkgutil -y -i python
fi

if [[ ! -e "/opt/csw/bin/sudo" ]] ; then
    echo "Installing sudo."
    /opt/csw/bin/pkgutil -y -i sudo
fi

# openssl is only used below to generate a random password hash
if [[ ! -f "/opt/csw/bin/openssl" ]] ; then
    echo "Installing openssl."
    /opt/csw/bin/pkgutil -y -i openssl_utils
fi

# Create the ansible user if needed; files created from here on are private
umask 077

if ! grep '^ansible:' /etc/passwd > /dev/null ; then
    echo "Creating ansible user and group."
    groupadd -g 1010 ansible
    useradd -u 1010 -g ansible -c "Ansible User" -s /bin/bash -m -d /export/home/ansible ansible

    echo "Setting a random password for the ansible user."
    PW_HASH=`/opt/csw/bin/openssl rand -hex 4 | /opt/csw/bin/openssl passwd -crypt -stdin`
    # /etc/shadow stores the last password change in days since the epoch
    SHADOW_TIME=`perl -e 'print int(time()/60/60/24);'`

    echo "Backing up /etc/shadow to /etc/shadow.backup"
    cp -p /etc/shadow /etc/shadow.backup
    # Anchor the match so other accounts whose lines contain "ansible" are kept
    grep -v '^ansible:' /etc/shadow > /etc/shadow.new
    echo "ansible:${PW_HASH}:${SHADOW_TIME}::::::" >> /etc/shadow.new
    mv /etc/shadow.new /etc/shadow
    chmod 400 /etc/shadow
fi

# Set up sudoers if needed
if [[ ! -f "/etc/opt/csw/sudoers.d/ansible" ]] ; then
    echo "Adding an entry for the ansible user in /etc/opt/csw/sudoers.d/ansible"
    echo "ansible ALL=(ALL) NOPASSWD: ALL" > /etc/opt/csw/sudoers.d/ansible
fi

# Create additional directories in the home directory and
# ensure the public key is correct
if [[ ! -d "/export/home/ansible/.ansible/tmp" ]] ; then
    mkdir -p /export/home/ansible/.ansible/tmp
fi

if [[ ! -d "/export/home/ansible/.ssh" ]] ; then
    mkdir /export/home/ansible/.ssh
fi

# Quote the key so it is written exactly as given
echo "$PUBKEY" > /export/home/ansible/.ssh/authorized_keys

chown -R ansible:ansible /export/home/ansible

echo "ansible user configuration script has completed."
```
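The /etc/shadow edit is the riskiest step in the script, so it may be worth isolating it in a function that can be tried against a copy of the file first. A minimal sketch follows; set_shadow_hash and its arguments are hypothetical. It matches the user on the first field only, replaces that entry in place (or appends one if it is missing), and leaves the file's owner and mode untouched.

```bash
#!/bin/bash
# set_shadow_hash FILE USER HASH [DAYS]  (hypothetical helper)
# Replaces USER's entry in the shadow-format FILE, or appends one if absent,
# using HASH as the password field. DAYS ("last changed", in days since the
# epoch) defaults to today, computed with perl as in the script above.
set_shadow_hash() {
    local file=$1 user=$2 hash=$3
    local days=${4:-$(perl -e 'print int(time()/86400)')}
    local tmp="$file.new"
    # Match on the first field exactly, so "ansiblex" is not mistaken for "ansible".
    # name:password:lastchg:min:max:warn:inactive:expire:flag = nine fields.
    awk -F: -v OFS=: -v u="$user" -v h="$hash" -v d="$days" '
        $1 == u { print u, h, d, "", "", "", "", "", ""; found = 1; next }
        { print }
        END { if (!found) print u, h, d, "", "", "", "", "", "" }
    ' "$file" > "$tmp" || return 1
    # Overwrite in place so FILE keeps its original owner and mode
    cat "$tmp" > "$file" && rm -f "$tmp"
}
```

For example, copying /etc/shadow to /tmp/shadow.test and running set_shadow_hash /tmp/shadow.test ansible "$PW_HASH" lets you inspect the result before touching the real file. Note that awk -v interprets backslash escapes, which is harmless here because crypt-style hashes never contain backslashes.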

```perl
#!/usr/bin/perl -w

use strict;

my @chars = ('.', '/', 'A'..'Z', 'a'..'z', 0..9);
my $csw_mirror = 'your-mirror-url';
my $csw_release = 'bratislava';

my $pubkey = <<'PUBKEY';
YOUR_PUBLIC_SSH_KEY
PUBKEY

# You can set a password here, but I choose to set a random one.
my $ansible_pw = join('', @chars[ map { rand @chars } ( 1 .. 8 )]);

if (-f '/opt/csw/bin/pkgutil') {
    print "pkgutil is already installed. Skipping this step.\n";
} else {
    print "Installing pkgutil.\n";
    unless (-f '/tmp/pkgutil.pkg') {
        die "You must copy pkgutil.pkg to /tmp before proceeding.\n";
    }
    # Create a pkgadd admin file to avoid being prompted during installation.
    open(ADMIN, '>', '/tmp/adminfile') || die "Unable to open /tmp/adminfile: $!\n";
    print ADMIN "action=nocheck\n";
    close(ADMIN);
    system('yes all | pkgadd -a /tmp/adminfile -d /tmp/pkgutil.pkg');
    unless (-f '/opt/csw/bin/pkgutil') {
        die "pkgutil did not install successfully. Exiting.\n";
    }
}

# Update /etc/opt/csw/pkgutil.conf with the correct mirror
print "Updating /etc/opt/csw/pkgutil.conf with the correct mirror\n";
open(PKGUTIL_IN, '<', '/etc/opt/csw/pkgutil.conf')
    || die "Unable to open /etc/opt/csw/pkgutil.conf: $!\n";
open(PKGUTIL_OUT, '>', '/tmp/pkgutil.conf') || die "Unable to open /tmp/pkgutil.conf: $!\n";
while (my $line = <PKGUTIL_IN>) {
    if ($line =~ /^#?mirror\=/) {
        print PKGUTIL_OUT "mirror=${csw_mirror}/${csw_release}\n";
    } else {
        print PKGUTIL_OUT $line;
    }
}
close(PKGUTIL_IN);
close(PKGUTIL_OUT);
system('mv /tmp/pkgutil.conf /etc/opt/csw/pkgutil.conf');

my @packages;
unless (-f '/opt/csw/bin/sudo') {
    push(@packages, 'sudo');
}
unless (-f '/opt/csw/bin/python') {
    push(@packages, 'python');
}
if (scalar(@packages) > 0) {
    my $install = '/opt/csw/bin/pkgutil -y -i ' . join(' ', @packages);
    print 'Installing ' . join(', ', @packages) . "\n";
    system($install);
} else {
    print "Python and sudo are already installed.\n";
}

print "Checking if the ansible user already exists.\n";
my $user_created = 0;
open(PASSWD, '<', '/etc/passwd') || die "Unable to open /etc/passwd: $!\n";
while (my $line = <PASSWD>) {
    if ($line =~ /^ansible\:/) {
        $user_created = 1;
    }
}
close(PASSWD);

# Everything created from here on (including /etc/shadow.new) must be private.
umask 077;

if ($user_created == 0) {
    print "Creating ansible user and group.\n";
    system('groupadd -g 1010 ansible');
    system('useradd -u 1010 -g ansible -c "Ansible User" -s /bin/bash -m -d /export/home/ansible ansible');

    # Setting the password for the user.
    print "Backing up /etc/shadow to /etc/shadow.backup. You should check over /etc/shadow before rebooting.\n";
    system('cp -p /etc/shadow /etc/shadow.backup');
    # /etc/shadow stores the last password change in days since the epoch
    my $shadow_time = int(time()/60/60/24);
    my $salt = join('', @chars[ map { rand @chars } ( 1 .. 8 )]);
    my $pw_hash = crypt($ansible_pw, $salt);
    open(SHADOW_IN, '<', '/etc/shadow') || die "Unable to open /etc/shadow: $!\n";
    open(SHADOW_OUT, '>', '/etc/shadow.new') || die "Unable to open /etc/shadow.new: $!\n";
    while (my $line = <SHADOW_IN>) {
        if ($line =~ /^ansible\:/) {
            print SHADOW_OUT "ansible:${pw_hash}:${shadow_time}::::::\n";
        } else {
            print SHADOW_OUT $line;
        }
    }
    close(SHADOW_IN);
    close(SHADOW_OUT);
    system('mv /etc/shadow.new /etc/shadow');
    chmod(0400, '/etc/shadow');
} else {
    print "User already created.\n";
}

print "Adding sudoers entry if needed.\n";
unless (-f '/etc/opt/csw/sudoers.d/ansible') {
    open(SUDOERS_OUT, '>', '/etc/opt/csw/sudoers.d/ansible') ||
        die "Unable to open /etc/opt/csw/sudoers.d/ansible: $!\n";
    print SUDOERS_OUT "ansible ALL=(ALL) NOPASSWD: ALL\n";
    close(SUDOERS_OUT);
}

print "Creating /export/home/ansible/.ansible/tmp if needed.\n";
unless (-d '/export/home/ansible/.ansible/tmp') {
    system('mkdir -p /export/home/ansible/.ansible/tmp');
}

print "Creating /export/home/ansible/.ssh if needed.\n";
unless (-d '/export/home/ansible/.ssh') {
    system('mkdir -p /export/home/ansible/.ssh');
}

# This will run every time because I am lazy
print "Setting up public key.\n";
open(PUBKEY, '>', '/export/home/ansible/.ssh/authorized_keys') ||
    die "Unable to open /export/home/ansible/.ssh/authorized_keys: $!\n";
print PUBKEY $pubkey;
close(PUBKEY);

print "Ensuring that the home directory is owned by the ansible user.\n";
system('chown -R ansible:ansible /export/home/ansible');
```
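Whichever script you use, a quick check afterwards can confirm that everything Ansible depends on is in place before you move on to the control node. A minimal sketch follows; the function name and its optional root-prefix argument (useful for trying it against a scratch directory) are hypothetical, and the paths are the Solaris 9 ones used in the scripts above.

```bash
#!/bin/bash
# check_ansible_prereqs [ROOT]  (hypothetical helper)
# Reports which of the pieces the preparation scripts create are present.
# ROOT prefixes every path so the check can be run against a copy of the tree.
check_ansible_prereqs() {
    local root=${1:-} missing=0 path
    for path in \
        /opt/csw/bin/pkgutil \
        /opt/csw/bin/python \
        /opt/csw/bin/sudo \
        /etc/opt/csw/sudoers.d/ansible \
        /export/home/ansible/.ssh/authorized_keys
    do
        if [ -e "$root$path" ]; then
            echo "ok      $path"
        else
            echo "MISSING $path"
            missing=$((missing + 1))
        fi
    done
    # The ansible account itself, matched on the user name field only
    if grep '^ansible:' "$root/etc/passwd" > /dev/null 2>&1; then
        echo "ok      ansible user"
    else
        echo "MISSING ansible user"
        missing=$((missing + 1))
    fi
    # Exit status 0 only when nothing is missing
    [ "$missing" -eq 0 ]
}
```

Run as root on a prepared Solaris 9 host, it should print only "ok" lines and return 0. On Solaris 8 the sudoers path in the list would need to change, since the entry lives in a different file there.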

I SCP’d the scripts and pkgutil.pkg to /tmp on my Solaris 9 systems, and tested each one successfully.

Configure Ansible on the control node

After configuring the Ansible remote user, I created an ansible.cfg file in the working directory on the Linux control node:

```ini
[defaults]
remote_user = ansible
deprecation_warnings = false
host_key_checking = false
interpreter_python = /opt/csw/bin/python
inventory = inventory
remote_tmp = $HOME/.ansible/tmp
private_key_file = ~/.ssh/id_rsa

[privilege_escalation]
become_exe = /opt/csw/bin/sudo
become = true
```

I will explain a few of the necessary options. interpreter_python of course tells Ansible where to find the Python interpreter on the system. On most Linux systems Ansible is able to discover this in locations such as /usr/bin/python, /usr/bin/python3, etc., but it won’t know to look in /opt/csw/bin. Similarly, Ansible won’t know to look in /opt/csw/bin for the sudo command. Below shows the results of attempting to run the setup module without those options.

remote_tmp = $HOME/.ansible/tmp is a solution to this problem here. Finally, I have only tested this with Ansible 2.10.8 running on Linux Mint 21.1, both out-of-date at this point. Versions of Ansible newer than 2.12 have removed support for Python 2.6.

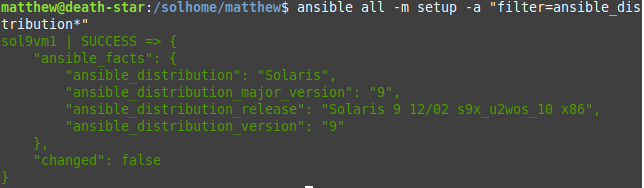
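If the same control node also manages Linux hosts, the Solaris-specific settings do not have to live in the global ansible.cfg. A sketch of an alternative follows, assuming the standard connection variables ansible_python_interpreter, ansible_become_exe and ansible_remote_tmp (I have not tested this variant on these systems). The write_solaris_inventory function is hypothetical and simply writes an INI inventory in which only the Solaris group gets those settings.

```bash
#!/bin/bash
# write_solaris_inventory FILE GROUP HOST...  (hypothetical helper)
# Writes an INI inventory where only GROUP carries the OpenCSW-specific settings.
write_solaris_inventory() {
    local file=$1 group=$2
    shift 2
    {
        printf '[%s]\n' "$group"
        printf '%s\n' "$@"
        printf '\n[%s:vars]\n' "$group"
        # Single quotes keep $HOME literal, as in the ansible.cfg setting
        printf '%s\n' \
            'ansible_python_interpreter=/opt/csw/bin/python' \
            'ansible_become_exe=/opt/csw/bin/sudo' \
            'ansible_remote_tmp=$HOME/.ansible/tmp'
    } > "$file"
}
```

For example, write_solaris_inventory inventory solaris_9 sol9vm1 sb100 writes a [solaris_9] group containing both hosts plus a [solaris_9:vars] section, after which the matching lines should no longer be needed in ansible.cfg.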

So does it work? Yes, surprisingly. After going through the usual period of troubleshooting, I was finally able to run the setup module against my Solaris 9 VM:

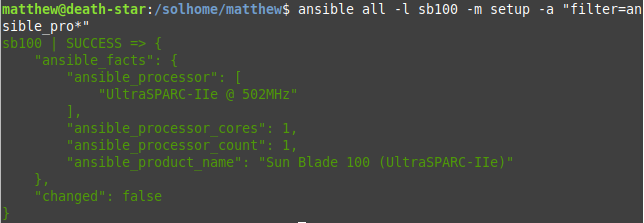

It also worked against my SB-100, which of course runs considerably slower.

Writing a simple playbook to configure NTP

For my first playbook, I decided to write a basic one that installs NTP from the OpenCSW repository and configures ntp.conf. While the SPARC version of Solaris 9 has a built-in NTP server, the x86 version I am running does not. For simplicity’s sake, I decided to install the same version on both SPARC and x86. Below is the playbook:

```yaml
---
- hosts: solaris_9
  vars:
    ntp_servers:
      - '0.pool.ntp.org'
      - '1.pool.ntp.org'

  tasks:
    - name: Install the ntp OpenCSW package
      community.general.pkgutil:
        name: CSWntp
        state: present

    - name: Deploy /etc/opt/csw/ntp.conf
      ansible.builtin.template:
        src: ntp.conf.j2
        dest: /etc/opt/csw/ntp.conf
      notify: restart ntp

  handlers:
    - name: restart ntp
      ansible.builtin.command: /etc/init.d/cswntp restart
```

And the Jinja2 template, ntp.conf.j2:

```jinja
driftfile /var/ntp/ntp.drift
{% for server in ntp_servers %}
pool {{ server }} iburst
{% endfor %}
```
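To see what the template will produce for a given server list without running a play, the loop is easy to mirror in the shell. This is only a sketch: render_ntp_conf is a hypothetical helper, and it relies on the fact that Ansible's template module enables Jinja2's trim_blocks by default, so the {% %} lines leave no blank lines behind.

```bash
#!/bin/bash
# render_ntp_conf SERVER...  (hypothetical helper)
# Prints the ntp.conf that ntp.conf.j2 renders when ntp_servers is SERVER...
render_ntp_conf() {
    echo 'driftfile /var/ntp/ntp.drift'
    local server
    for server in "$@"; do
        echo "pool $server iburst"
    done
}
```

With the playbook's two servers, render_ntp_conf 0.pool.ntp.org 1.pool.ntp.org prints the driftfile line followed by one "pool ... iburst" line per server.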

Below shows the playbook running successfully:

The Ansible lineinfile module works as well:

```yaml
---
- hosts: solaris_9
  tasks:
    - name: disable root SSH login
      ansible.builtin.lineinfile:
        path: /etc/ssh/sshd_config
        backup: true
        regexp: '^#?PermitRootLogin'
        line: 'PermitRootLogin no'
      notify: restart sshd

  handlers:
    - name: restart sshd
      ansible.builtin.command: /etc/init.d/sshd restart
```
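For readers more at home in shell scripts, the task above roughly amounts to "replace the last line matching the regular expression, or append the line if nothing matches", which is how lineinfile treats regexp when insertafter and insertbefore are left at their defaults. The sketch below approximates that for illustration only: set_line is hypothetical, uses extended regular expressions via grep -E (so it targets a modern Linux box rather than Solaris 9's /usr/bin/grep), and skips lineinfile's backup and validation features.

```bash
#!/bin/bash
# set_line FILE REGEX LINE  (hypothetical helper)
# Replaces the last line of FILE matching the ERE REGEX with LINE, or appends
# LINE when nothing matches. Prints "changed" or "ok", like Ansible does.
set_line() {
    local file=$1 regex=$2 line=$3 last tmp
    # Line number of the last match (empty when there is none)
    last=$(grep -n -E -e "$regex" "$file" | tail -n 1 | cut -d: -f1)
    tmp=$(mktemp) || return 1
    if [ -n "$last" ]; then
        awk -v n="$last" -v l="$line" 'NR == n { print l; next } { print }' "$file" > "$tmp"
    else
        cat "$file" > "$tmp"
        printf '%s\n' "$line" >> "$tmp"
    fi
    if cmp -s "$tmp" "$file"; then
        echo ok
    else
        # Overwrite in place so the file keeps its owner and mode
        cat "$tmp" > "$file"
        echo changed
    fi
    rm -f "$tmp"
}
```

Running set_line sshd_config '^#?PermitRootLogin' 'PermitRootLogin no' twice prints "changed" and then "ok", which is why the regular expression should match both the commented and the final form of the line.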

These are simple examples that demonstrate that Ansible can be used to configure Solaris 9, which came out in 2002! Next, I will try something more complicated: configuring the LDAP client from my previous post.

Configuring LDAP authentication with Ansible

The LDAP client in Solaris is initialized with the ldapclient command. When creating a playbook to configure LDAP authentication, I wanted this command to run only when the configuration changes. My solution was to template a shell script that contains the ldapclient command with all of the configuration options, and to call it from an Ansible handler whenever the rendered script changes. The playbook pushes the script out to /opt/local/bin (supposedly the preferred location in Solaris for custom scripts). Here is the template, configure_ldap_client.sh.j2:

```bash
#!/bin/bash

# Modify an existing client configuration, or create one from scratch
if [[ -f '/var/ldap/ldap_client_cred' ]] ; then
    ACTION='mod'
else
    ACTION='manual'
fi

ldapclient $ACTION -a credentialLevel=proxy \
    -a authenticationMethod=simple \
    -a "proxyPassword={{ bind_pw }}" \
    -a "proxyDN={{ bind_dn }}" \
    -a defaultSearchBase={{ ldap_base }} \
    -a serviceSearchDescriptor=passwd:ou=people,{{ ldap_base }} \
    -a serviceSearchDescriptor=group:ou=groups,{{ ldap_base }} \
    -a serviceAuthenticationMethod=pam_ldap:simple \
    -a "preferredServerList={{ ldap_servers | join(' ') }}" || exit 1

# Only switch nsswitch.conf over once ldapclient has succeeded
cp /etc/nsswitch.ldap /etc/nsswitch.conf
```
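When debugging the handler, it can help to see exactly which ldapclient command line the template produces without running it against a live client. The function below is a hypothetical dry-run helper that mirrors the template: it picks mod when the credential file exists and manual otherwise, builds the arguments in a bash array (which avoids backslash-continuation and quoting mistakes), and prints them one per line. It deliberately leaves out proxyPassword so the output is safe to share.

```bash
#!/bin/bash
# ldap_client_args CRED_FILE BASE BIND_DN SERVER...  (hypothetical helper)
# Prints the ldapclient invocation the template would run, one argument per
# line. The bind password is intentionally omitted.
ldap_client_args() {
    local cred_file=$1 base=$2 bind_dn=$3
    shift 3
    local action=manual
    if [ -f "$cred_file" ]; then
        action=mod
    fi
    local -a args=(
        "$action"
        -a credentialLevel=proxy
        -a authenticationMethod=simple
        -a "proxyDN=$bind_dn"
        -a "defaultSearchBase=$base"
        -a "serviceSearchDescriptor=passwd:ou=people,$base"
        -a "serviceSearchDescriptor=group:ou=groups,$base"
        -a serviceAuthenticationMethod=pam_ldap:simple
        # "$*" joins the servers with spaces, like join(' ') in the template
        -a "preferredServerList=$*"
    )
    printf '%s\n' ldapclient "${args[@]}"
}
```

For example, ldap_client_args /var/ldap/ldap_client_cred dc=example,dc=com uid=authagent,ou=backend,dc=example,dc=com LDAP_SERVER_IP_1 LDAP_SERVER_IP_2 shows which mode the next handler run would use and how the server list will be passed.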

Now for the playbook itself. For this post, I wrote a simple one with the variables in the playbook. This is not the preferred approach; it is better to put the variables in a group_vars/ file. Furthermore, I strongly recommend encrypting your LDAP bind password with Ansible Vault, so that it doesn’t accidentally get committed to GitHub or some other public place. There are many guides on how to do this, and it is beyond the scope of this post.

```yaml
---
- hosts: solaris_9
  gather_facts: false
  vars:
    automount: 'YOUR_NFS_HOME_SHARE'
    ldap_servers:
      - 'LDAP_SERVER_IP_1'
      - 'LDAP_SERVER_IP_2'
    ldap_base: 'dc=example,dc=com'
    bind_dn: 'uid=authagent,ou=backend,dc=example,dc=com'
    bind_pw: 'YOUR_BIND_PW_THAT_SHOULD_GO_IN_ANSIBLE_VAULT'

  tasks:
    - name: Set DNS in /etc/nsswitch.ldap
      ansible.builtin.lineinfile:
        path: /etc/nsswitch.ldap
        regexp: '^hosts:'
        line: 'hosts: files dns'

    - name: Create /opt/local/bin
      ansible.builtin.file:
        path: "{{ item }}"
        state: directory
        mode: '0755'
      loop:
        - /opt/local
        - /opt/local/bin

    - name: Create /opt/local/bin/configure_ldap_client.sh
      ansible.builtin.template:
        src: configure_ldap_client.sh.j2
        dest: /opt/local/bin/configure_ldap_client.sh
        mode: '0700'
      notify: Configure LDAP Client
      no_log: true

    # You may want to set backup to true
    - name: Configure /etc/pam.conf
      ansible.builtin.copy:
        src: pam.conf_sol9
        dest: /etc/pam.conf
        #backup: true

    - name: Configure /etc/auto_home
      ansible.builtin.lineinfile:
        path: /etc/auto_home
        regexp: '^\*'
        line: "* -fstype=nfs {{ automount }}/&"
      notify: Restart autofs

  handlers:
    - name: Configure LDAP Client
      ansible.builtin.command: '/opt/local/bin/configure_ldap_client.sh'

    - name: Restart autofs
      ansible.builtin.sysvinit:
        name: autofs
        state: restarted
```
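In the auto_home entry the playbook writes, the * key matches any name the automounter is asked for (here, a user name looked up under /home) and & is replaced by that name, so every user gets their own directory from the NFS share. The sketch below shows that substitution purely for illustration; expand_auto_home is hypothetical and does not talk to the automounter.

```bash
#!/bin/bash
# expand_auto_home ENTRY KEY  (hypothetical helper)
# Shows what a wildcard auto_home ENTRY ("* options location") resolves to for
# KEY: the "* " key field is dropped and every & becomes KEY.
expand_auto_home() {
    local entry=$1 key=$2
    local rest=${entry#\* }
    # Quoting "$key" keeps bash 5.2's patsub_replacement from treating & specially
    rest=${rest//&/"$key"}
    printf '%s\n' "$rest"
}
```

For a user named matthew, expand_auto_home '* -fstype=nfs 192.168.87.75:/solhome/&' matthew yields "-fstype=nfs 192.168.87.75:/solhome/matthew", the share that ends up mounted on /home/matthew.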

The output below is from a successful run of this playbook (it was too long to fit in a screenshot):

```
ansible-playbook -D sol_ldap_client.yml

PLAY [solaris_9] ***************************************************************

TASK [Set DNS in /etc/nsswitch.ldap] *******************************************
--- before: /etc/nsswitch.ldap (content)
+++ after: /etc/nsswitch.ldap (content)
@@ -12,7 +12,7 @@
 group: files ldap
 # consult /etc "files" only if ldap is down.
-hosts: ldap [NOTFOUND=return] files
+hosts: files dns
 ipnodes: files
 # Uncomment the following line and comment out the above to resolve
 # both IPv4 and IPv6 addresses from the ipnodes databases. Note that
changed: [sol9vm1]
--- before: /etc/nsswitch.ldap (content)
+++ after: /etc/nsswitch.ldap (content)
@@ -12,7 +12,7 @@
 group: files ldap
 # consult /etc "files" only if ldap is down.
-hosts: ldap [NOTFOUND=return] files
+hosts: files dns
 ipnodes: files
 # Uncomment the following line and comment out the above to resolve
 # both IPv4 and IPv6 addresses from the ipnodes databases. Note that
changed: [sb100]

TASK [Create /opt/local/bin] ***************************************************
--- before
+++ after
@@ -1,4 +1,4 @@
 {
     "path": "/opt/local",
-    "state": "absent"
+    "state": "directory"
 }
changed: [sol9vm1] => (item=/opt/local)
--- before
+++ after
@@ -1,4 +1,4 @@
 {
     "path": "/opt/local/bin",
-    "state": "absent"
+    "state": "directory"
 }
changed: [sol9vm1] => (item=/opt/local/bin)
--- before
+++ after
@@ -1,4 +1,4 @@
 {
     "path": "/opt/local",
-    "state": "absent"
+    "state": "directory"
 }
changed: [sb100] => (item=/opt/local)
--- before
+++ after
@@ -1,4 +1,4 @@
 {
     "path": "/opt/local/bin",
-    "state": "absent"
+    "state": "directory"
 }
changed: [sb100] => (item=/opt/local/bin)

TASK [Create /opt/local/bin/configure_ldap_client.sh] **************************
changed: [sol9vm1]
changed: [sb100]

TASK [Configure /etc/pam.conf] *************************************************
--- before: /etc/pam.conf
+++ after: /solhome/matthew/pam.conf_sol9
@@ -19,39 +19,45 @@
 #
 login auth requisite pam_authtok_get.so.1
 login auth required pam_dhkeys.so.1
-login auth required pam_unix_auth.so.1
 login auth required pam_dial_auth.so.1
+login auth binding pam_unix_auth.so.1 server_policy
+login auth required pam_ldap.so.1
 #
 # rlogin service (explicit because of pam_rhost_auth)
 #
 rlogin auth sufficient pam_rhosts_auth.so.1
 rlogin auth requisite pam_authtok_get.so.1
 rlogin auth required pam_dhkeys.so.1
-rlogin auth required pam_unix_auth.so.1
+rlogin auth binding pam_unix_auth.so.1 server_policy
+rlogin auth required pam_ldap.so.1
 #
 # rsh service (explicit because of pam_rhost_auth,
 # and pam_unix_auth for meaningful pam_setcred)
 #
 rsh auth sufficient pam_rhosts_auth.so.1
-rsh auth required pam_unix_auth.so.1
+rsh auth binding pam_unix_auth.so.1 server_policy
+rsh auth required pam_ldap.so.1
 #
 # PPP service (explicit because of pam_dial_auth)
 #
 ppp auth requisite pam_authtok_get.so.1
 ppp auth required pam_dhkeys.so.1
-ppp auth required pam_unix_auth.so.1
 ppp auth required pam_dial_auth.so.1
+ppp auth binding pam_unix_auth.so.1 server_policy
+ppp auth required pam_ldap.so.1
 #
 # Default definitions for Authentication management
 # Used when service name is not explicitly mentioned for authenctication
 #
 other auth requisite pam_authtok_get.so.1
 other auth required pam_dhkeys.so.1
-other auth required pam_unix_auth.so.1
+other auth binding pam_unix_auth.so.1 server_policy
+other auth required pam_ldap.so.1
 #
 # passwd command (explicit because of a different authentication module)
 #
-passwd auth required pam_passwd_auth.so.1
+passwd auth binding pam_passwd_auth.so.1 server_policy
+passwd auth required pam_ldap.so.1
 #
 # cron service (explicit because of non-usage of pam_roles.so.1)
 #
@@ -63,7 +69,8 @@
 #
 other account requisite pam_roles.so.1
 other account required pam_projects.so.1
-other account required pam_unix_account.so.1
+other account binding pam_unix_account.so.1 server_policy
+other account required pam_ldap.so.1
 #
 # Default definition for Session management
 # Used when service name is not explicitly mentioned for session management
@@ -76,7 +83,7 @@
 other password required pam_dhkeys.so.1
 other password requisite pam_authtok_get.so.1
 other password requisite pam_authtok_check.so.1
-other password required pam_authtok_store.so.1
+other password required pam_authtok_store.so.1 server_policy
 #
 # Support for Kerberos V5 authentication (uncomment to use Kerberos)
 #
changed: [sol9vm1]
--- before: /etc/pam.conf
+++ after: /solhome/matthew/pam.conf_sol9
@@ -19,39 +19,45 @@
 #
 login auth requisite pam_authtok_get.so.1
 login auth required pam_dhkeys.so.1
-login auth required pam_unix_auth.so.1
 login auth required pam_dial_auth.so.1
+login auth binding pam_unix_auth.so.1 server_policy
+login auth required pam_ldap.so.1
 #
 # rlogin service (explicit because of pam_rhost_auth)
 #
 rlogin auth sufficient pam_rhosts_auth.so.1
 rlogin auth requisite pam_authtok_get.so.1
 rlogin auth required pam_dhkeys.so.1
-rlogin auth required pam_unix_auth.so.1
+rlogin auth binding pam_unix_auth.so.1 server_policy
+rlogin auth required pam_ldap.so.1
 #
 # rsh service (explicit because of pam_rhost_auth,
 # and pam_unix_auth for meaningful pam_setcred)
 #
 rsh auth sufficient pam_rhosts_auth.so.1
-rsh auth required pam_unix_auth.so.1
+rsh auth binding pam_unix_auth.so.1 server_policy
+rsh auth required pam_ldap.so.1
 #
 # PPP service (explicit because of pam_dial_auth)
 #
 ppp auth requisite pam_authtok_get.so.1
 ppp auth required pam_dhkeys.so.1
-ppp auth required pam_unix_auth.so.1
 ppp auth required pam_dial_auth.so.1
+ppp auth binding pam_unix_auth.so.1 server_policy
+ppp auth required pam_ldap.so.1
 #
 # Default definitions for Authentication management
 # Used when service name is not explicitly mentioned for authenctication
 #
 other auth requisite pam_authtok_get.so.1
 other auth required pam_dhkeys.so.1
-other auth required pam_unix_auth.so.1
+other auth binding pam_unix_auth.so.1 server_policy
+other auth required pam_ldap.so.1
 #
 # passwd command (explicit because of a different authentication module)
 #
-passwd auth required pam_passwd_auth.so.1
+passwd auth binding pam_passwd_auth.so.1 server_policy
+passwd auth required pam_ldap.so.1
 #
 # cron service (explicit because of non-usage of pam_roles.so.1)
 #
@@ -63,7 +69,8 @@
 #
 other account requisite pam_roles.so.1
 other account required pam_projects.so.1
-other account required pam_unix_account.so.1
+other account binding pam_unix_account.so.1 server_policy
+other account required pam_ldap.so.1
 #
 # Default definition for Session management
 # Used when service name is not explicitly mentioned for session management
@@ -76,7 +83,7 @@
 other password required pam_dhkeys.so.1
 other password requisite pam_authtok_get.so.1
 other password requisite pam_authtok_check.so.1
-other password required pam_authtok_store.so.1
+other password required pam_authtok_store.so.1 server_policy
 #
 # Support for Kerberos V5 authentication (uncomment to use Kerberos)
 #
changed: [sb100]

TASK [Configure /etc/auto_home] ************************************************
--- before: /etc/auto_home (content)
+++ after: /etc/auto_home (content)
@@ -1,3 +1,4 @@
 # Home directory map for automounter
 #
 +auto_home
+* -fstype=nfs 192.168.87.75:/solhome/&
changed: [sol9vm1]
--- before: /etc/auto_home (content)
+++ after: /etc/auto_home (content)
@@ -1,3 +1,4 @@
 # Home directory map for automounter
 #
 +auto_home
+* -fstype=nfs 192.168.87.75:/solhome/&
changed: [sb100]

RUNNING HANDLER [Configure LDAP Client] ****************************************
changed: [sol9vm1]
changed: [sb100]

RUNNING HANDLER [Restart autofs] ***********************************************
changed: [sol9vm1]
changed: [sb100]

PLAY RECAP *********************************************************************
sb100 : ok=7 changed=7 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
sol9vm1 : ok=7 changed=7 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
```

Using the sysvinit module

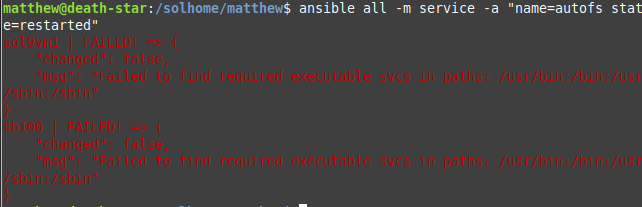

To restart services in the earlier NTP and SSH examples, I used the command module to call the scripts in /etc/init.d, because the Ansible service module would fail.

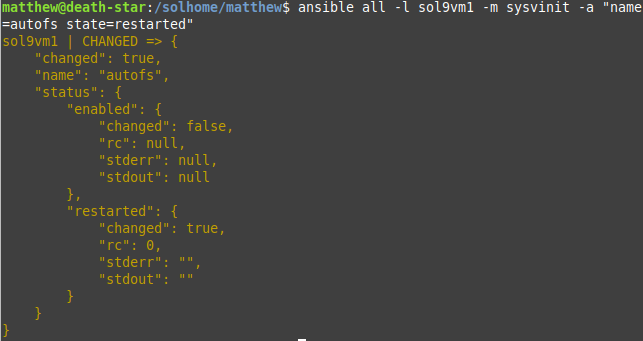

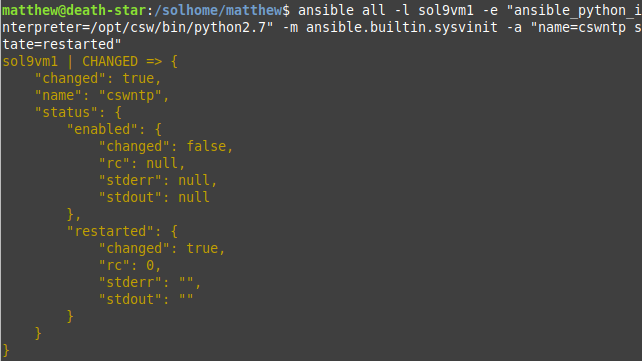

Only later did I discover that Ansible includes a sysvinit module (ansible.builtin.sysvinit) that can restart SysV init scripts. I tested it with the autofs service, whose init script does not have a restart action:

The output below shows the sysvinit module used as the handler in the NTP playbook, called successfully after I added a third NTP server:

```
matthew@death-star:/solhome/matthew$ tail -5 ntp.yml
  handlers:
    - name: restart ntp
      ansible.builtin.sysvinit:
        name: cswntp
        state: restarted
matthew@death-star:/solhome/matthew$ ansible-playbook -D ntp.yml
[WARNING]: Collection community.general does not support Ansible version 2.10.8

PLAY [solaris_9] ***************************************************************

TASK [Gathering Facts] *********************************************************
ok: [sol9vm1]
ok: [sb100]

TASK [Install the ntp OpenCSW package] *****************************************
ok: [sol9vm1]
ok: [sb100]

TASK [Deploy /etc/opt/csw/ntp.conf] ********************************************
--- before: /etc/opt/csw/ntp.conf
+++ after: /home2/matthew/.ansible/tmp/ansible-local-8441qe7y1m4f/tmph1273un4/ntp.conf.j2
@@ -1,3 +1,4 @@
 driftfile /var/ntp/ntp.drift
 pool 0.pool.ntp.org iburst
 pool 1.pool.ntp.org iburst
+pool 2.pool.ntp.org iburst
changed: [sol9vm1]
--- before: /etc/opt/csw/ntp.conf
+++ after: /home2/matthew/.ansible/tmp/ansible-local-8441qe7y1m4f/tmp41fvp7tl/ntp.conf.j2
@@ -1,3 +1,4 @@
 driftfile /var/ntp/ntp.drift
 pool 0.pool.ntp.org iburst
 pool 1.pool.ntp.org iburst
+pool 2.pool.ntp.org iburst
changed: [sb100]

RUNNING HANDLER [restart ntp] **************************************************
changed: [sol9vm1]
changed: [sb100]

PLAY RECAP *********************************************************************
sb100 : ok=4 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
sol9vm1 : ok=4 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
```

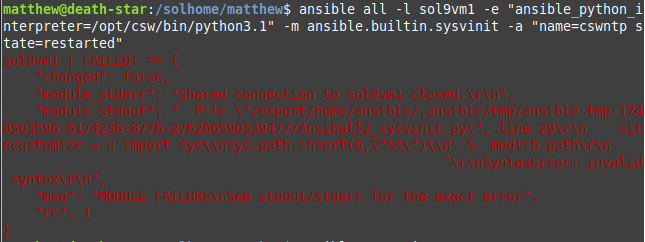
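As I understand its documentation, the sysvinit module handles state: restarted by running the script's stop action and then its start action (with a configurable pause in between, its sleep option) rather than relying on a restart action existing, which is why it copes with autofs. A rough shell equivalent for illustration follows; restart_sysv is hypothetical, and its optional init-directory argument exists only so it can be tried against a stand-in script.

```bash
#!/bin/bash
# restart_sysv NAME [SLEEP] [INIT_DIR]  (hypothetical helper)
# Restarts a SysV init script by running "stop" and then "start", so it also
# works for scripts that have no "restart" action, such as autofs.
restart_sysv() {
    local name=$1 pause=${2:-1} dir=${3:-/etc/init.d}
    local script="$dir/$name"
    if [ ! -x "$script" ]; then
        echo "no such init script: $script" >&2
        return 1
    fi
    # Design choice: ignore stop's exit status, since stopping a service
    # that is not running can fail harmlessly
    "$script" stop
    sleep "$pause"
    "$script" start
}
```

On a Solaris host, restart_sysv autofs would run /etc/init.d/autofs stop, wait a second, and then run /etc/init.d/autofs start; the exit status is that of the start action.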

Python 2.7 and 3.1 support

My verdict: Python 2.7 works, but 3.1 does not. This makes little difference anyway, as you will still need to use an older version of Ansible, such as 2.10.
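The 3.1 failure is most likely down to Ansible's managed-node requirement, which for Ansible 2.10 is documented as Python 2.6 or later on the Python 2 side, or Python 3.5 or later on the Python 3 side. A small sketch for checking a version string against that rule before pointing interpreter_python at it; python_ok is hypothetical, and the version thresholds are the ones just stated.

```bash
#!/bin/bash
# python_ok VERSION  (hypothetical helper)
# Succeeds if VERSION (e.g. "2.6.9" or "3.1.4") meets the managed-node
# requirement assumed here: Python 2.6+ or Python 3.5+.
python_ok() {
    local major minor rest
    IFS=. read -r major minor rest <<< "$1"
    case $major in
        2) [ "${minor:-0}" -ge 6 ] ;;
        3) [ "${minor:-0}" -ge 5 ] ;;
        *) return 1 ;;
    esac
}
```

Python 2 prints its version on stderr, so a check on the host could look like python_ok "$(/opt/csw/bin/python -V 2>&1 | cut -d' ' -f2)".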

Bonus section: does this work on Solaris 8?

The short answer: for the most part, with modifications.

- SSH will need to be configured. A previous post of mine describes how to do this.

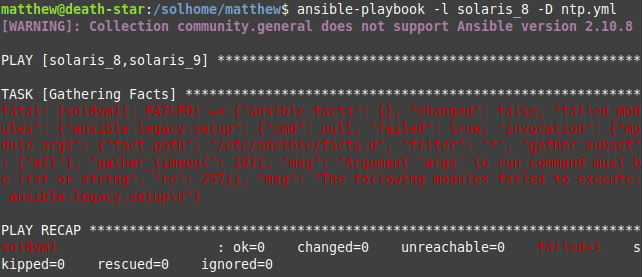

- You will need to disable fact gathering with gather_facts: false in your playbook or gathering = explicit in your ansible.cfg. Otherwise, you will get the below error that is of little help:

-

- You will need to use the dublin release for OpenCSW instead of bratislava.

- You will need to put the sudo entry for the ansible user in /opt/csw/etc/sudoers instead of /etc/opt/csw/sudoers.d/ansible. I prefer putting sudo entries in sudoers.d, but the sudo version available for Solaris 8 does not support it. Here is the Ansible preparation script with these modifications:

```bash
#!/bin/bash

PUBKEY='YOUR_SSH_PUBLIC_KEY'

# Install pkgutil from /tmp/pkgutil.pkg
if [[ ! -f "/opt/csw/bin/pkgutil" ]] ; then
    if [[ ! -f "/tmp/pkgutil.pkg" ]] ; then
        echo "You must copy pkgutil.pkg to /tmp"
        exit 1
    else
        echo "Installing pkgutil."
        pkgadd -d /tmp/pkgutil.pkg
    fi
fi

# Set the mirror in /etc/opt/csw/pkgutil.conf (dublin release for Solaris 8)
perl -pi -e 's/^#?mirror=\S+/mirror=http:\/\/your_mirror_ip\/solaris\/opencsw\/dublin/' /etc/opt/csw/pkgutil.conf

# Install packages if needed
if [[ ! -f "/opt/csw/bin/python" ]] ; then
    echo "Installing python."
    /opt/csw/bin/pkgutil -y -i python
fi

if [[ ! -e "/opt/csw/bin/sudo" ]] ; then
    echo "Installing sudo."
    /opt/csw/bin/pkgutil -y -i sudo
fi

# openssl is only used below to generate a random password hash
if [[ ! -f "/opt/csw/bin/openssl" ]] ; then
    echo "Installing openssl."
    /opt/csw/bin/pkgutil -y -i openssl_utils
fi

# Create the ansible user if needed; files created from here on are private
umask 077

if ! grep '^ansible:' /etc/passwd > /dev/null ; then
    echo "Creating ansible user and group."
    groupadd -g 1010 ansible
    useradd -u 1010 -g ansible -c "Ansible User" -s /bin/bash -m -d /export/home/ansible ansible

    echo "Setting a random password for the ansible user."
    PW_HASH=`/opt/csw/bin/openssl rand -hex 4 | /opt/csw/bin/openssl passwd -crypt -stdin`
    # /etc/shadow stores the last password change in days since the epoch
    SHADOW_TIME=`perl -e 'print int(time()/60/60/24);'`

    echo "Backing up /etc/shadow to /etc/shadow.backup"
    cp -p /etc/shadow /etc/shadow.backup
    # Anchor the match so other accounts whose lines contain "ansible" are kept
    grep -v '^ansible:' /etc/shadow > /etc/shadow.new
    echo "ansible:${PW_HASH}:${SHADOW_TIME}::::::" >> /etc/shadow.new
    mv /etc/shadow.new /etc/shadow
    chmod 400 /etc/shadow
fi

# Set up sudoers if needed (this sudo version has no sudoers.d support)
if ! grep ansible /opt/csw/etc/sudoers > /dev/null ; then
    echo "Adding an entry for the ansible user in /opt/csw/etc/sudoers"
    echo '# Entry for the ansible user' >> /opt/csw/etc/sudoers
    echo 'ansible ALL=(ALL) NOPASSWD: ALL' >> /opt/csw/etc/sudoers
fi

# Create additional directories in the home directory and
# ensure the public key is correct
if [[ ! -d "/export/home/ansible/.ansible/tmp" ]] ; then
    mkdir -p /export/home/ansible/.ansible/tmp
fi

if [[ ! -d "/export/home/ansible/.ssh" ]] ; then
    mkdir /export/home/ansible/.ssh
fi

# Quote the key so it is written exactly as given
echo "$PUBKEY" > /export/home/ansible/.ssh/authorized_keys

chown -R ansible:ansible /export/home/ansible

echo "ansible user configuration script has completed."
```

- On Solaris 8, where SSH comes from OpenCSW, sshd_config is located at /opt/csw/etc/ssh/sshd_config and the service name is cswopenssh. I have updated disable_root_ssh.yml accordingly:

```yaml
---
- hosts: solaris_8
  gather_facts: false
  tasks:
    - name: disable root SSH login
      ansible.builtin.lineinfile:
        path: /opt/csw/etc/ssh/sshd_config
        backup: true
        regexp: '^#?PermitRootLogin'
        line: 'PermitRootLogin no'
      notify: restart cswopenssh

  handlers:
    - name: restart cswopenssh
      ansible.builtin.sysvinit:
        name: cswopenssh
        state: restarted
```

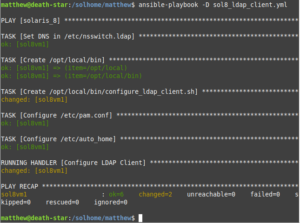

- Finally, the LDAP client playbook. The pam.conf file and the ldapclient command are different on Solaris 8, but everything else is the same. If fact gathering worked, I could have used a single playbook that picks templates and files based on the OS release, but that was not possible in this case.

```yaml
---
- hosts: solaris_8
  gather_facts: false
  vars:
    automount: 'YOUR_NFS_HOME_SHARE'
    ldap_servers:
      - 'LDAP_SERVER_IP_1'
      - 'LDAP_SERVER_IP_2'
    ldap_base: 'dc=example,dc=com'
    bind_dn: 'uid=authagent,ou=backend,dc=example,dc=com'
    bind_pw: 'YOUR_BIND_PW_THAT_SHOULD_GO_IN_ANSIBLE_VAULT'

  tasks:
    - name: Set DNS in /etc/nsswitch.ldap
      ansible.builtin.lineinfile:
        path: /etc/nsswitch.ldap
        regexp: '^hosts:'
        line: 'hosts: files dns'

    - name: Create /opt/local/bin
      ansible.builtin.file:
        path: "{{ item }}"
        state: directory
        mode: '0755'
      loop:
        - /opt/local
        - /opt/local/bin

    - name: Create /opt/local/bin/configure_ldap_client.sh
      ansible.builtin.template:
        src: configure_ldap_client_8.sh.j2
        dest: /opt/local/bin/configure_ldap_client.sh
        mode: '0700'
      notify: Configure LDAP Client
      no_log: true

    # You may want to set backup to true
    - name: Configure /etc/pam.conf
      ansible.builtin.copy:
        src: pam.conf_sol8
        dest: /etc/pam.conf
        #backup: true

    - name: Configure /etc/auto_home
      ansible.builtin.lineinfile:
        path: /etc/auto_home
        regexp: '^\*'
        line: "* -fstype=nfs {{ automount }}/&"
      notify: Restart autofs

  handlers:
    - name: Configure LDAP Client
      ansible.builtin.command: '/opt/local/bin/configure_ldap_client.sh'

    - name: Restart autofs
      ansible.builtin.sysvinit:
        name: autofs
        state: restarted
```

The configure_ldap_client_8.sh.j2 template:

```bash
#!/bin/bash

# Modify an existing client configuration, or create one from scratch
if [[ -f '/var/ldap/ldap_client_cred' ]] ; then
    ACTION='mod'
else
    ACTION='manual'
fi

ldapclient $ACTION -a credentialLevel=proxy \
    -a authenticationMethod=simple \
    -a "proxyPassword={{ bind_pw }}" \
    -a "proxyDN={{ bind_dn }}" \
    -a defaultSearchBase={{ ldap_base }} \
    -a serviceSearchDescriptor=passwd:ou=people,{{ ldap_base }} \
    -a serviceSearchDescriptor=group:ou=groups,{{ ldap_base }} \
    -a serviceAuthenticationMethod=pam_ldap:simple \
    -a "preferredServerList={{ ldap_servers | join(' ') }}" || exit 1

# Only switch nsswitch.conf over once ldapclient has succeeded
cp /etc/nsswitch.ldap /etc/nsswitch.conf
```
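The Solaris 8 preparation script differs from the Solaris 9 one mainly in the OpenCSW release in the mirror URL and in where the sudo entry goes, so a single script could pick both from uname -r (5.8 on Solaris 8, 5.9 on Solaris 9). A sketch of that selection, limited to the two releases covered in this post; both function names are hypothetical.

```bash
#!/bin/bash
# csw_release [OS_RELEASE]  (hypothetical helper)
# OpenCSW catalog release used in this post for a SunOS release
# (defaults to the running system's `uname -r`).
csw_release() {
    local rel=${1:-$(uname -r)}
    case $rel in
        5.8) echo dublin ;;
        5.9) echo bratislava ;;
        *) echo "untested SunOS release: $rel" >&2; return 1 ;;
    esac
}

# sudoers_target [OS_RELEASE]  (hypothetical helper)
# Where the ansible sudo entry goes: the main sudoers file on Solaris 8,
# whose sudo lacks sudoers.d support, and a sudoers.d file otherwise.
sudoers_target() {
    case ${1:-$(uname -r)} in
        5.8) echo /opt/csw/etc/sudoers ;;
        *) echo /etc/opt/csw/sudoers.d/ansible ;;
    esac
}
```

The hard-coded bratislava or dublin in the mirror URL could then become $(csw_release). The caller still has to append to the Solaris 8 sudoers file but create a fresh file under sudoers.d on Solaris 9.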

Final thoughts

In sum, I was pleasantly surprised that Ansible could handle most tasks on these very old systems. I believe it is a viable way to manage Solaris, especially if you are already familiar with Ansible. In the future, I will post any additional findings from running Ansible on Solaris 10.

As always, thank you for reading.