Recently I have started taking more time to learn Ansible, building role-based projects similar to what I’ve always done with Puppet rather than simple monolithic playbooks. I still believe Puppet is superior to Ansible when a host has a long list of items to manage, whereas Ansible excels at more narrowly scoped tasks such as pushing out some files and restarting a service. However, I’m sure most would disagree with me, and in my lab I have chosen to use Ansible for most tasks, in keeping with the latest trend. For this post, I created a simple Ansible project to set up a CentOS/EL8 Jenkins build node running Docker. The directory structure of the project is below:

├── inventory
├── jenkins_build.yml
└── roles
    ├── docker
    │   └── tasks
    │       ├── install.yml
    │       ├── main.yml
    │       └── service.yml
    └── jenkins_node
        ├── files
        │   └── jenkins.pub
        └── tasks
            ├── install.yml
            ├── main.yml
            └── user.yml

First, I created a simple inventory file. The inventory just has one host for now with no variables.

[jenkins_build]
jenkins-node2

I then created a simple playbook, jenkins_build.yml, that includes the two roles I need.

---
- hosts: jenkins_build
  roles:
    - docker
    - jenkins_node

Next, I created the roles with mkdir -p roles/docker/tasks and mkdir -p roles/jenkins_node/{tasks,files}. This project is extremely simple; it does not use templates, variables, handlers, etc. and probably could have just used a monolithic playbook for brevity’s sake. However, I decided to use the full directory structure so that the roles could be reused later.
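The layout described above can also be created in one step. The following is a minimal sketch, not the exact commands I ran: make_role_skeleton is a hypothetical helper name, and it assumes you pass the project directory as the first argument (it falls back to the current directory).

```shell
# make_role_skeleton is a hypothetical helper; the project directory is its
# only argument and defaults to the current directory.
make_role_skeleton() {
    base="${1:-.}"

    # Paths are spelled out instead of using {tasks,files}, because brace
    # expansion is a bash feature and is not available in plain POSIX sh.
    mkdir -p "$base/roles/docker/tasks" \
             "$base/roles/jenkins_node/tasks" \
             "$base/roles/jenkins_node/files" || return 1

    # touch creates missing files and leaves existing content untouched.
    touch "$base/inventory" \
          "$base/jenkins_build.yml" \
          "$base/roles/docker/tasks/install.yml" \
          "$base/roles/docker/tasks/main.yml" \
          "$base/roles/docker/tasks/service.yml" \
          "$base/roles/jenkins_node/tasks/install.yml" \
          "$base/roles/jenkins_node/tasks/main.yml" \
          "$base/roles/jenkins_node/tasks/user.yml"
}

# Usage: make_role_skeleton /path/to/project
```

The files start out empty; jenkins.pub is left out on purpose, since it has to be copied from your Jenkins server.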

First, I’ll go over the Docker role. All it does is install the docker-ce packages from Docker’s repository and ensure that the service is running. The install.yml file also needs to import the Docker GPG key:

---
# install.yml
- rpm_key: state=present key=https://download.docker.com/linux/centos/gpg
- yum_repository:
    name: docker-ce-stable
    description: Docker CE Stable - $basearch
    baseurl: https://download.docker.com/linux/centos/$releasever/$basearch/stable
    gpgcheck: yes
    gpgkey: https://download.docker.com/linux/centos/gpg
- yum: name=docker-ce state=installed

---
# service.yml
- service: name=docker state=started enabled=yes

main.yml includes both of the above:

# import_tasks is used because the bare include action was removed in ansible-core 2.16
- import_tasks: install.yml
- import_tasks: service.yml

Next, the Jenkins node role: this role installs the required packages and creates the jenkins user. First, you will need to generate a password hash for the jenkins user. To do so, execute the Python one-liner below:

python -c 'import crypt,getpass;pw=getpass.getpass();print(crypt.crypt(pw) if (pw==getpass.getpass("Confirm: ")) else exit())'

Then include the resulting hash in your user.yml file:

---
- user:
    name: jenkins
    state: present
    password: 'hash'
    group: users
    groups:
      - docker
- ansible.posix.authorized_key:
    user: jenkins
    key: "{{ lookup('file', 'jenkins.pub') }}"
    state: present
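One caveat on the password hash used in user.yml: the Python one-liner relies on the crypt module, which was deprecated in Python 3.11 and removed in Python 3.13, so it fails on newer interpreters. A possible alternative is sketched below. It assumes OpenSSL 1.1.1 or later is installed (the release that added the -6 option), and hash_password is a hypothetical helper name, not something from my project.

```shell
# hash_password is a hypothetical helper: it reads one password from stdin
# and prints a salted SHA-512 crypt hash (a string beginning with $6$).
# Assumes OpenSSL 1.1.1 or later, which provides the -6 option.
hash_password() {
    openssl passwd -6 -stdin
}

# Interactive alternative that prompts for the password without echoing it,
# so the password never ends up in your shell history:
#   openssl passwd -6
```

The salt is random, so the output differs on every run; any of the resulting hashes can be pasted into the password field in place of 'hash'.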

If you want your Jenkins server to connect to the node using an SSH key, you will need to place the public key in the role’s files directory; I saved mine as jenkins.pub. Then create the .yml files that install the necessary packages and include the tasks.

# install.yml
---
- yum:
    name:
      - git
      - java-1.8.0-openjdk
    state: installed

# main.yml
---
# import_tasks is used because the bare include action was removed in ansible-core 2.16
- import_tasks: install.yml
- import_tasks: user.yml

Finally, run the playbook against the Jenkins build node from a host with Ansible installed. You might want to run it first with the -C option to ensure that it does what you expect it to do:

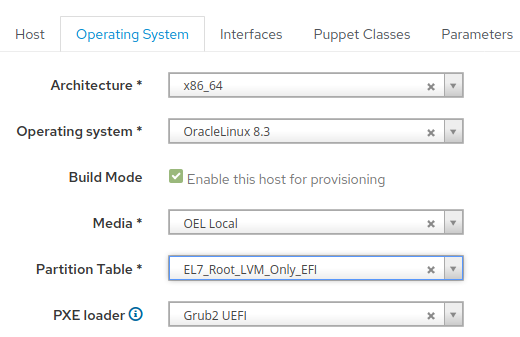

ansible-playbook -Kkb -i inventory -D jenkins_build.yml

If this was successful, you should then be able to add the node to Jenkins under Dashboard > Manage Jenkins > Manage Nodes and Clouds > New Node.
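The check-mode preview mentioned earlier can be chained with the real run so that changes are only applied if the preview succeeds. This is a minimal sketch rather than part of my project: run_playbook and preview_then_apply are hypothetical helper names, and PLAYBOOK_CMD is a hypothetical shell variable (not an Ansible setting) that lets you substitute another command for ansible-playbook.

```shell
# run_playbook is a hypothetical wrapper around the command used in this post.
# Extra flags (such as -C) are passed through ahead of the playbook name.
# PLAYBOOK_CMD is a hypothetical override, not an Ansible environment variable.
run_playbook() {
    "${PLAYBOOK_CMD:-ansible-playbook}" -Kkb -i inventory -D "$@" jenkins_build.yml
}

# Preview in check mode (-C) first, then apply only if the preview succeeded.
# Because of -K and -k, you are prompted for passwords on both runs.
preview_then_apply() {
    run_playbook -C && run_playbook
}

# Usage: preview_then_apply
```

Check mode is not a perfect rehearsal here: the docker-ce install depends on the repository that an earlier task adds, so on a fresh host the preview may report a failure that would not occur in a real run.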

This concludes my blog post. It is a rather simple Ansible task, but it demonstrates a practical use case, especially if you are setting up a bunch of build nodes for Jenkins. In my next post I will show how I configured a Jenkins job to build an RPM in a Docker container on the node I added here.