Introduction

In my previous post on the Xen hypervisor, I mentioned my interest in writing a Perl provisioning tool for Xen that functions similarly to Terraform. This tool isn’t meant to replace Terraform, nor do I claim that it is superior, but there doesn’t seem to be a Terraform provider for standalone Xen. Furthermore, sometimes it makes more sense to code a custom tool that does exactly what you want than to adapt an existing tool, especially a complicated one like Terraform. This tool has a single purpose: to let the user define a set of virtual machines with their specs (RAM, disk space, static IP addresses, etc.), spin up these VMs with a single command, and take them down when they are no longer needed.

This tool was designed to work with paravirtualized systems only, using the Debian and Ubuntu cloud images. My experience with Enterprise Linux 8 or later systems in Xen has been negative; they can run only in fully-virtualized mode and don’t seem to come back up properly after a system reboot. My recommendation would be to stick with KVM for EL systems. For Debian and Ubuntu, paravirtualized virtual machines are an option, and might provide better performance than full virtualization, at least on older hardware. One drawback to using Xen paravirtualization is that the primary boot disk can only be in the RAW format, which is thick-provisioned; QCOW2 disks will not work with pygrub. This slows down the script considerably, as the 5GB base image has to be copied for every instance (especially on the slow SSD on my test system). (Note: I later considered copying the disks as QCOW2 images and then converting them to RAW format, but ultimately did not implement it.)

Host setup

The host I tested this script on runs Debian 12 and has two NICs. Debian 13 will work as well. Having two NICs isn’t necessary, though it is helpful if you’re doing VLANs like I am. In my case, I set up a separate VLAN for the VMs, with dnsmasq running on the host, providing DNS and DHCP services to the VMs. My router (actually a Linux computer) and my managed switch have been configured to route traffic for this VLAN. Below is how I configured the Xen host for this:

- Installed the bridge-utils and vlan packages: sudo apt install -y bridge-utils vlan

- Configured a bridge interface on the second NIC in /etc/network/interfaces similar to the below code snippet. Note: the bridge will need to have a static IP address for dnsmasq.

- Restarted the networking service: sudo systemctl restart networking

auto enp2s0
iface enp2s0 inet manual

auto enp2s0.40
iface enp2s0.40 inet manual

auto br40
iface br40 inet static
    address 192.168.40.2/24
    bridge_ports enp2s0.40
    bridge_stp off
    bridge_waitport 0
    bridge_fd 0

You don’t have to use dnsmasq with the script. If you want to manage your DHCP service elsewhere, you can exclude dnsmasq in the YAML file for the script—more on this later. If you have only one NIC and just want to do a simple bridge, /etc/network/interfaces can be set to something like below:

# The primary network interface
allow-hotplug eno1
iface eno1 inet manual

auto br0
iface br0 inet static
    address 192.168.40.2/24
    gateway 192.168.40.1
    bridge_ports eno1
    bridge_stp off
    bridge_waitport 0
    bridge_fd 0

Or for DHCP:

# The primary network interface
allow-hotplug eno1
iface eno1 inet manual

auto br0
iface br0 inet dhcp
    bridge_ports eno1
    bridge_stp off
    bridge_waitport 0
    bridge_fd 0

Another idea I thought of would be to set up a NAT network confined to the host, similar to what libvirt does. Perhaps one day I will revisit doing that.

To configure everything else on the host for this exercise:

- Install the necessary packages: sudo apt install --no-install-recommends dnsmasq genisoimage libtemplate-perl libyaml-libyaml-perl qemu-utils xen-hypervisor xen-hypervisor-common xen-utils

- Edit /etc/default/grub.d/xen.cfg, un-comment the parameter GRUB_CMDLINE_XEN_DEFAULT, and set it to "dom0_mem=1024M,max:1024M loglvl=all guest_loglvl=all" (following the “best practices” guide here).

- Run sudo update-grub to apply the changes and reboot the system to enable the Xen kernel.

- Un-comment this line in /etc/dnsmasq.conf: conf-dir=/etc/dnsmasq.d/,*.conf

- Create something similar to the below file, /etc/dnsmasq.d/01-xen.conf.

- Restart dnsmasq: sudo systemctl restart dnsmasq

listen-address=::1,127.0.0.1,192.168.40.2
no-resolv
no-hosts
server=192.168.1.1
domain=ridpath.lab
dhcp-range=192.168.40.100,192.168.40.200,12h
dhcp-option=3,192.168.40.1
dhcp-option=6,192.168.40.2

Obtaining cloud images

For this exercise, I used the cloud images that can be downloaded from Debian and Ubuntu. As of this writing, both Debian and Ubuntu can run as paravirtualized guests.

- Debian images can be found here. Select the OS release (I only have tested bookworm/12 and trixie/13), “latest”, then the generic-amd64.raw image.

- Ubuntu images can be found here. I’ve only tried the “noble” release. Download the server-cloudimg-amd64.img image. This will be in QCOW2, which isn’t readable by pygrub and will need to be converted to RAW format. This can be done with qemu-img: qemu-img convert -f qcow2 -O raw noble-server-cloudimg-amd64.img noble-server-cloudimg-amd64.raw.

One day I might look at trying NetBSD and FreeBSD in Xen, as both apparently support paravirtualization, but I haven’t tried these yet.

The script

As noted before, the script is written in Perl and uses the Template::Toolkit and YAML::XS modules. The package names for these in Debian are libtemplate-perl and libyaml-libyaml-perl. The script uses seven files:

- A project.yml or project.yaml file. This is the file that contains the YAML parameters for the script. You can call it whatever you want, as long as it has a .yml or .yaml extension. I named mine db.yaml. The project name will be taken from the filename without the extension.

- ansible_inventory.tt. This is a Template-Toolkit template file used to generate an Ansible inventory file from the YAML. You won’t need it unless ansible_inventory is set to 1 in the YAML file. The generated file will be named <project>_inventory.

- reservations.tt. This is a Template-Toolkit template file used to generate a dnsmasq configuration file with DHCP reservations and DNS records. The generated file will be located at /etc/dnsmasq.d/99_<project>.conf. You won’t need it if nodnsmasq is set to 1 in the YAML file.

- xen.tt. Another Template-Toolkit template file, used to generate each /etc/xen/<instance>.cfg file.

- user-data file for cloud-init.

- ProvVMs.pm. This contains the subroutines for the script. I could have put all of the subroutines in the script itself, but I thought putting them in a module would be better so that they could be reused in other scripts.

- prov_xen_yaml.pl: the script itself.

The code snippets for the three template files are below. They receive variables from ProvVMs.pm:

[% FOREACH host IN ansible_hosts -%]
[% host.key %][% IF host.value.ip != '' %] ansible_host=[% host.value.ip %][% END %]
[% END -%]
[% FOREACH group IN ansible_groups -%]
[% "[" %][% group.key %][% "]" %]
[% FOREACH member IN group.value.sort -%]
[% member %]
[% END -%]
[% END -%]

# DHCP reservations
[% FOREACH resv IN reservations -%]
dhcp-host=[% resv.value.mac %],[% resv.value.ip %],[% resv.key %]
[% END -%]
# DNS records
[% FOREACH resv IN reservations -%]
address=/[% resv.key %]/[% resv.value.ip %]
[% END -%]

name = '[% name %]'
type = 'pv'
memory = '[% ram %]'
vcpus = [% vcpu %]
disk = [ '[% disk_img %],raw,xvda,w',[% add_disks %] ]
vif = [ 'mac=[% mac %],bridge=[% bridge %]' ]
bootloader = 'pygrub'
on_reboot = 'restart'
on_crash = 'restart'
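To make the data flow a bit more concrete, here is a rough plain-Perl approximation of what reservations.tt renders. It is only a sketch: it deliberately skips Template Toolkit, the render_reservations name and the sample MAC address are made up for illustration, and it sorts the hosts by name simply to get repeatable output.

```perl
#!/usr/bin/perl
use strict;
use warnings;

# Hypothetical helper (not part of ProvVMs.pm): build the dnsmasq lines that
# reservations.tt describes, from a hash of FQDN => { ip => ..., mac => ... }.
sub render_reservations {
    my ($reservations) = @_;
    my @hosts = sort(keys(%{$reservations}));
    my @lines = ('# DHCP reservations');
    foreach my $fqdn (@hosts) {
        my $r = $reservations->{$fqdn};
        push(@lines, "dhcp-host=$r->{mac},$r->{ip},$fqdn");
    }
    push(@lines, '# DNS records');
    foreach my $fqdn (@hosts) {
        push(@lines, "address=/$fqdn/$reservations->{$fqdn}->{ip}");
    }
    return join("\n", @lines) . "\n";
}

# Sample data; the MAC address is invented for this example.
my $sample = {
    'galera1.ridpath.lab' => { ip => '192.168.40.30', mac => '00:16:3E:AA:BB:01' },
};
print render_reservations($sample);
```

Each host ends up with one dhcp-host line (MAC, IP, name) and one address line (name to IP), which is all dnsmasq needs to hand out the static address and answer DNS queries for it.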

Next, I will attempt to explain the format of the .yaml/.yml file. The file consists of optional single-value global parameters and an instances hash that defines the virtual machines and their parameters. First, the list of global parameters you can set:

- ansible_inventory. Set to 1 to generate an Ansible inventory file (<project>_inventory).

- default_bridge: the default network bridge to use. Default is br0. Setting this is recommended.

- default_disk_size (in GB). If not specified, defaults to 10.

- default_image: full path to the default cloud image to use. There is no default set for this and if you choose not to set it, you will need to set the image: <image> parameter for each instance. Therefore, setting this parameter is recommended.

- default_ram (in MB). If not specified, defaults to 1024.

- default_user_data_file: specify the path to an alternative default user-data file for cloud-init. By default it uses the file user-data in the same directory as the script.

- default_vcpu: default number of virtual CPUs. Default is 1.

- disk_path: the default directory where disk images are stored for all instances. Default is /srv/xen.

- nodnsmasq. Default is 0. Set to 1 to disable dnsmasq.

- domain: the default domain for all instances. Default is localdomain. This is only used for dnsmasq and the Ansible inventory.

Below is a code snippet with each parameter set. As noted previously, all of these are optional, but some are recommended.

---
ansible_inventory: 1
default_bridge: br0
default_disk_size: 20
default_image: /srv/xen/debian-13-generic-amd64.raw
default_ram: 2048
default_vcpu: 1
disk_path: /srv/xen
nodnsmasq: 0
domain: example.net

Now for the instances section of the YAML file. For each instance, the below optional parameters can be set to override the defaults:

- ansible_groups: an array/list of Ansible groups to add the machine to.

- autostart: set to 1 if the instance should be started when the host is booted.

- bridge: the network bridge for the instance.

- disk: a size in GB for the main OS disk.

- disk_path: a path to the directory where the instance’s disk(s) will be stored.

- domain: the instance’s domain.

- image: the path to an OS cloud image to use.

- ip: an IP for use with dnsmasq. If the global parameter nodnsmasq is set to 1, this is ignored, except for its use in the Ansible inventory.

- mac: a MAC address for the instance. Useful if you’re managing the DHCP reservation elsewhere.

- ram: the RAM size in MB.

- skip: set to 1 to skip over an instance. This ensures that it won’t get deleted by mistake.

- user_data_file: the path to an alternative cloud-init user-data file.

- additional_disks: a hash/dictionary for specifying additional disks. Each accepts three parameters: size (in GB, required), format (raw or qcow2), and path (defaults to the same path as the OS disk).

Below is a sample code snippet with one instance (db1) that sets all of these parameters and another (web1) that sets none of them:

---
instances:
  db1:
    autostart: 1
    bridge: br10
    ip: 192.168.10.10
    ram: 4096
    disk: 20
    disk_path: /nfs/xen
    domain: db.example.net
    image: /nfs/xen/noble-server-cloudimg-amd64.raw
    mac: 00:11:22:33:44:dd
    user_data_file: /usr/local/etc/user-data_mysql
    additional_disks:
      data:
        size: 30
        format: qcow2
        path: /nfs/mysql
    ansible_groups:
      - db
  web1: {}
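The override order described above (instance value first, then the global default, then the built-in fallback) can be sketched in a few lines of Perl. This is an illustration rather than code from the script: effective_settings and the two lookup tables are hypothetical names, image and user_data_file are left out for brevity, and it uses || the same way the script does, so a value of 0 also falls through to the default.

```perl
#!/usr/bin/perl
use strict;
use warnings;

# Built-in fallbacks, as listed in the global parameter descriptions.
my %BUILTIN = (
    bridge    => 'br0',
    disk      => 10,
    ram       => 1024,
    vcpu      => 1,
    disk_path => '/srv/xen',
    domain    => 'localdomain',
);

# Which global key supplies the default for each per-instance key.
my %GLOBAL_KEY = (
    bridge    => 'default_bridge',
    disk      => 'default_disk_size',
    ram       => 'default_ram',
    vcpu      => 'default_vcpu',
    disk_path => 'disk_path',
    domain    => 'domain',
);

# Hypothetical helper: instance value, else global default, else built-in.
sub effective_settings {
    my ($globals, $instance) = @_;
    my %result;
    foreach my $key (sort(keys(%BUILTIN))) {
        $result{$key} = $instance->{$key}
            || $globals->{ $GLOBAL_KEY{$key} }
            || $BUILTIN{$key};
    }
    return \%result;
}

my $globals = { default_bridge => 'br40', default_ram => 2048 };
my $db1     = effective_settings($globals, { ram => 4096, bridge => 'br10' });
my $web1    = effective_settings($globals, {});
printf("db1:  ram=%d bridge=%s disk=%d\n", @{$db1}{qw(ram bridge disk)});
printf("web1: ram=%d bridge=%s disk=%d\n", @{$web1}{qw(ram bridge disk)});
```

With these sample values, db1 keeps its own ram and bridge, while an empty instance such as web1 picks up the global ram and bridge and the built-in disk size.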

Finally, here is a code snippet with some parameters set. It is for provisioning a Galera cluster with a ProxySQL load balancer pair:

---
default_bridge: br40
default_image: /srv/xen/debian-13-generic-amd64.raw
domain: ridpath.lab
ansible_inventory: 1
instances:
  galera1:
    ip: 192.168.40.30
    ram: 2048
    disk: 20
    additional_disks:
      data:
        size: 30
        format: qcow2
    ansible_groups:
      - db
  galera2:
    ip: 192.168.40.31
    ram: 2048
    disk: 20
    additional_disks:
      data:
        size: 30
        format: qcow2
    ansible_groups:
      - db
  galera3:
    ip: 192.168.40.32
    ram: 2048
    disk: 20
    additional_disks:
      data:
        size: 30
        format: qcow2
    ansible_groups:
      - db
  proxysql1:
    ip: 192.168.40.33
    ram: 1024
    disk: 10
    ansible_groups:
      - db_proxy
  proxysql2:
    ip: 192.168.40.34
    ram: 1024
    disk: 10
    ansible_groups:
      - db_proxy

The user-data file is similar to the one I used in my post Xen Paravirtualization Part 2 – Debian:

#cloud-config
users:
  - name: ansible_user
    passwd: $6$7JnhIhkmDNu4rkr8$1NPhvMbqW.dsPmQBgQ6fIbptd2mqw49byvMdjIldnlg.AW44PR7YNa0esI9lXCS2PY8XIIEqdY4.kBmyvQUuJ.
    ssh_authorized_keys:
      - ssh-ed25519 pubkey1 mattpubkey1
      - ssh-ed25519 pubkey1 mattpubkey1
    groups: sudo
    sudo: ALL=(ALL) ALL
    shell: /bin/bash
    lock_passwd: false
ssh_pwauth: true
ssh_deletekeys: true
chpasswd:
  expire: false
  users:
    - name: root
      password: $6$7JnhIhkmDNu4rkr8$1NPhvMbqW.dsPmQBgQ6fIbptd2mqw49byvMdjIldnlg.AW44PR7YNa0esI9lXCS2PY8XIIEqdY4.kBmyvQUuJ.

Now for the module file, ProvVMs.pm. This contains all of the subroutines, which can potentially be used by other scripts.

use strict;

use warnings;

use Template;

package ProvVMs;

require Exporter;

our @ISA = qw(Exporter);

our @EXPORT = qw(mac_gen validate_ip check_bridge check_image create_ci_iso osinfo_query parse_ansible_inventory parse_reservations_file parse_xen_conf clean_leases_file);

# Generate a random MAC address.

sub mac_gen {

my @m;

my $x = 0;

while ($x < 3) {

$m[$x] = int(rand(256));

$x++;

}

my $mac = sprintf("00:16:3E:%02X:%02X:%02X", @m);

return $mac;

}

# Regex-validate an IP address.

sub validate_ip {

my $ip = $_[0];

unless ($ip =~ /^(\d{1,3}\.){3}\d{1,3}$/) {

die "$ip failed regex IP test. Exiting.\n";

}

}

# Check if bridge interface is valid.

sub check_bridge {

my $bridge = $_[0];

unless ($bridge =~ /^br\d{1,4}$/) {

die "Bridge interface identifier invalid. It must be like br0, etc.\n";

}

unless (-x '/usr/sbin/brctl') {

die "bridge-utils are missing!\n";

}

my $bridge_found = 0;

open(BRCTL, '/usr/sbin/brctl show |') || die "brctl show failed!\n";

while (my $line = <BRCTL>) {

if ($line =~ /^$bridge\s+/) {

$bridge_found = 1;

last;

}

}

close(BRCTL);

if ($bridge_found == 0) {

die "Bridge interface $bridge not present!\n";

}

}

# Run qemu-img info on the base image and check if the size is sufficient.

sub check_image {

my($cloud_image, $disk_size) = @_;

unless (-x '/usr/bin/qemu-img') {

die "qemu-utils are missing!\n";

}

my($cloud_img_size, $img_fmt);

open(QEMU_IMG, "/usr/bin/qemu-img info $cloud_image |") || die "qemu-img info $cloud_image failed!\n";

while (my $line = <QEMU_IMG>) {

if ($line =~ /file format:\s+(qcow2|raw)/) {

$img_fmt = $1;

}

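# Read the exact byte count and round up to whole GB, so sizes printed as "2.2 GiB" or "584 MiB" aren't misread.

if ($line =~ /virtual size:.*\((\d+) bytes\)/) {

$cloud_img_size = int(($1 + 1024**3 - 1) / 1024**3);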

}

}

close(QEMU_IMG);

if ($cloud_img_size > $disk_size) {

die "Disk size ${disk_size}GB is smaller than the virtual size of disk image ${cloud_image}. It must be at least ${cloud_img_size}GB.\n";

}

return($img_fmt);

}

# Create the cloud-init ISO

sub create_ci_iso {

my($name, $user_data_file) = @_;

unless (-x '/usr/bin/genisoimage') {

die "genisoimage is missing!\n";

}

mkdir("/tmp/${name}_ci");

system("cp $user_data_file /tmp/${name}_ci/user-data");

open(META_DATA, '>', "/tmp/${name}_ci/meta-data");

print META_DATA "instance-id: ${name}\nlocal-hostname: ${name}\n";

close(META_DATA);

system("/usr/bin/genisoimage -quiet -output /tmp/${name}_ci.iso -V cidata -r -J /tmp/${name}_ci/user-data /tmp/${name}_ci/meta-data") == 0 || die "genisoimage failed!\n";

}

# Validate OS type for virt-install with osinfo-query (not needed for Xen).

sub osinfo_query {

my $os_type = $_[0];

unless (-x '/usr/bin/osinfo-query') {

die "osinfo-query is missing!\n";

}

system("/usr/bin/osinfo-query os short-id=${os_type} > /dev/null") == 0 || die "OS type $os_type is invalid or osinfo-query failed! Please check.\n";

}

# Parse an Ansible inventory file

sub parse_ansible_inventory {

my $file = $_[0];

my $template = $_[1];

die "Template file $template not found!\n" unless (-f $template);

my $vars = {

ansible_hosts => $_[2],

ansible_groups => $_[3]

};

print "Creating Ansible inventory file ${file}\n";

my $tt = Template->new();

$tt->process($template, $vars, $file) || die $tt->error;

}

# Create a dnsmasq reservations file in /etc/dnsmasq.d

sub parse_reservations_file {

my $file = '/etc/dnsmasq.d/' . $_[0];

my $template = $_[1];

die "Template file $template not found!\n" unless (-f $template);

my $vars = {

reservations => $_[2]

};

print "Creating dnsmasq reservations file $file and restarting dnsmasq.\n";

my $tt = Template->new();

$tt->process($template, $vars, $file) || die $tt->error;

system('/usr/bin/systemctl restart dnsmasq') == 0 || die "dnsmasq failed to restart!\n";

}

# Parse /etc/xen/hostname.cfg

sub parse_xen_conf {

my $name = $_[0];

my $xen_vars = {

name => $name,

bridge => $_[1],

ram => $_[2],

vcpu => $_[3],

disk_img => $_[4],

mac => $_[5],

add_disks => $_[6]

};

my $template = $_[7];

my $xen_tt = Template->new();

$xen_tt->process($template, $xen_vars, "/etc/xen/${name}.cfg") || die $xen_tt->error;

}

# Takes a list of IP addresses and removes them from the dnsmasq lease file.

sub clean_leases_file {

my @ips = @_;

my @line_split;

open(LEASES_IN, '<', '/var/lib/misc/dnsmasq.leases') || die "Unable to open /var/lib/misc/dnsmasq.leases.\n";

open(LEASES_OUT, '>', '/tmp/dnsmasq.leases') || die "Unable to open /tmp/dnsmasq.leases.\n";

while (my $line = <LEASES_IN>) {

@line_split = split(/\s+/, $line);

if (grep { $_ eq $line_split[2] } @ips) {

next;

} else {

print LEASES_OUT $line;

}

}

close(LEASES_IN);

close(LEASES_OUT);

system('cp /tmp/dnsmasq.leases /var/lib/misc/dnsmasq.leases');

}

# A module should end with a true value so that "use ProvVMs" loads reliably.

1;
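One thing worth noting about validate_ip above: its regex only checks for four groups of one to three digits, so something like 999.1.1.1 would pass. Below is a minimal sketch of a stricter check. The name is_valid_ipv4 is hypothetical, and unlike validate_ip it returns 1 or 0 instead of dying, so the caller decides what to do with a bad address.

```perl
#!/usr/bin/perl
use strict;
use warnings;

# Hypothetical stricter variant of validate_ip: four dot-separated octets,
# each between 0 and 255. Returns 1 when valid, 0 otherwise.
sub is_valid_ipv4 {
    my ($ip) = @_;
    return 0 unless defined($ip);
    my @octets = ($ip =~ /\A(\d{1,3})\.(\d{1,3})\.(\d{1,3})\.(\d{1,3})\z/);
    return 0 unless (@octets == 4);
    foreach my $octet (@octets) {
        return 0 if ($octet > 255);
    }
    return 1;
}

foreach my $candidate ('192.168.40.30', '999.1.1.1', '192.168.40') {
    printf("%-15s %s\n", $candidate, is_valid_ipv4($candidate) ? 'ok' : 'rejected');
}
```

A check like this could be run on each ip: value from the YAML file before it is written into the dnsmasq reservations.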

Last, the script itself, prov_xen_yaml.pl. The script assumes that all files (ProvVMs.pm, the template files, etc.) are in the current working directory, and that it will be run with sudo ./prov_xen_yaml.pl yaml_file. It accepts the following options:

- -d: delete, instead of provision. It will require confirmation, similar to Terraform.

- -h comma-separated list of hosts: a comma-separated list of hosts to limit operations to. For example, when combined with -d, it will only delete the specified hosts.

The script will not overwrite an instance if it already exists; you must delete it first. This is when the -d and -h host1,host2,… options come in handy. The script won’t delete anything not present in the YAML file, so if you want to keep something from being deleted by mistake (an instance or an additional disk), simply remove it from the YAML file (or set skip: 1 on an instance to skip over that instance).
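The selection rules above can be condensed into a small sketch. It is not lifted from the script: hosts_to_delete is a hypothetical helper that takes the instances hash plus an optional host list and returns the names that would actually be removed.

```perl
#!/usr/bin/perl
use strict;
use warnings;

# Hypothetical helper mirroring the rules above: only instances present in the
# YAML are considered, -h narrows the list, and skip: 1 always protects an instance.
sub hosts_to_delete {
    my ($instances, @limit_hosts) = @_;
    my @candidates = @limit_hosts
        ? (grep { exists($instances->{$_}) } @limit_hosts)
        : (sort(keys(%{$instances})));
    return grep { !(($instances->{$_}->{'skip'} || 0) == 1) } @candidates;
}

my $instances = { db1 => {}, db2 => { skip => 1 }, web1 => {} };

# Equivalent of -d with no host list.
print join(', ', hosts_to_delete($instances)), "\n";

# Equivalent of -d -h db2,web1,old1 (old1 is not in the YAML).
print join(', ', hosts_to_delete($instances, qw(db2 web1 old1))), "\n";
```

In this sample, db2 survives both runs because of skip: 1, and old1 is never touched because it isn't defined in the YAML file.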

#!/usr/bin/perl -w

use strict;

use Getopt::Long;

use YAML::XS 'LoadFile';

use lib qw(.);

use ProvVMs;

my($delete, $help, $hosts);

GetOptions ("delete" => \$delete,

"hosts=s" => \$hosts

);

# Script requires specifying a project.yml or project.yaml file to process.

my $yaml_file = $ARGV[0] || die "You must specify a .yml or .yaml file.\n";

my $proj_name;

unless ($yaml_file =~ /^(\S+)\.(yaml|yml)$/) {

die "File must be a .yml or .yaml file.\n";

} else {

$proj_name = $1;

}

die "YAML file $yaml_file not found!\n" unless (-f $yaml_file);

my $yaml = YAML::XS::LoadFile($yaml_file) || die "Unable to load YAML file!\n";

# If -h host1,host2... is specified, limit operations to the listed instances.

my @limit_hosts;

if ($hosts) {

@limit_hosts = split(/,/, $hosts);

}

my $default_disk_path = $yaml->{'disk_path'} || '/srv/xen';

my $nodnsmasq = $yaml->{'nodnsmasq'} || 0;

# The delete section of the script. When -d is specified without -h, everything is deleted.

# When -h host1,host2... is specified, only the listed hosts are deleted.

# After all operations are completed, the script exits.

if ($delete) {

my @hosts_delete;

if (@limit_hosts) {

foreach my $host (@limit_hosts) {

if ($yaml->{'instances'}->{$host}) {

push(@hosts_delete, $host);

} else {

warn "Warning: instance $host not found in $yaml_file.\n";

}

}

} else {

@hosts_delete = sort(keys(%{$yaml->{'instances'}}));

}

print 'Warning: the following instances will be deleted: ' . join(', ', @hosts_delete) . "\n";

print 'Enter yes to continue: ';

chomp(my $selection = <STDIN>);

exit unless ($selection eq 'yes');

# Remove the project's dnsmasq reservations only after confirmation, and only when every instance is being deleted.

if (!(@limit_hosts) && ($nodnsmasq == 0) && (-f "/etc/dnsmasq.d/99_${proj_name}.conf")) {

unlink("/etc/dnsmasq.d/99_${proj_name}.conf");

system('/usr/bin/systemctl restart dnsmasq') == 0 || die "dnsmasq failed to restart!\n";

}

foreach my $host (@hosts_delete) {

# Set skip: 1 to skip over this instance.

if (($yaml->{'instances'}->{$host}->{'skip'}) && ($yaml->{'instances'}->{$host}->{'skip'} == 1)) {

print "Skipping instance $host because skip is set to 1.\n";

next;

}

print "Destroying instance $host.\n";

if (system("/usr/sbin/xl list $host > /dev/null 2>&1") == 0) {

system("/usr/sbin/xl destroy $host") == 0 || die "xl destroy $host failed!\n";

}

unlink("/etc/xen/auto/${host}.cfg") if (-f "/etc/xen/auto/${host}.cfg");

if (-f "/etc/xen/${host}.cfg") {

unlink("/etc/xen/${host}.cfg");

} else {

print "/etc/xen/${host}.cfg not found. Perhaps the instance was already deleted?\n";

}

my $disk_path = $yaml->{'instances'}->{$host}->{'disk_path'} || $default_disk_path;

unlink("${disk_path}/${host}.raw") if (-f "${disk_path}/${host}.raw");

if ($yaml->{'instances'}->{$host}->{'additional_disks'}) {

while (my($disk_name, $disk_params) = each %{$yaml->{'instances'}->{$host}->{'additional_disks'}}) {

my $add_disk_path = $disk_params->{'path'} || $disk_path;

my $format = $disk_params->{'format'} || 'raw';

unlink("${add_disk_path}/${host}_${disk_name}.${format}") if (-f "${add_disk_path}/${host}_${disk_name}.${format}");

}

}

}

print "All requested instances have been deleted (or were already deleted).\n";

exit;

}

# All code below here is used for provisioning/creating instances.

# Create default disk path and /etc/xen/auto if necessary.

unless (-d $default_disk_path) {

system("mkdir -p $default_disk_path");

}

unless (-d '/etc/xen/auto') {

mkdir('/etc/xen/auto');

}

# Read in default bridge from the YAML file and check if it exists with brctl.

my $default_bridge = 'br0';

unless ($yaml->{'default_bridge'}) {

warn "Warning: default_bridge not set in $yaml_file. Using br0.\n";

} else {

$default_bridge = $yaml->{'default_bridge'};

}

&check_bridge($default_bridge);

# Read in default image file from the YAML file.

my $default_image;

unless ($yaml->{'default_image'}) {

warn "Warning: default_image not set in $yaml_file. You must set image: <image> for each instance.\n";

} else {

$default_image = $yaml->{'default_image'};

die "Image $default_image not found!\n" unless (-f $default_image);

}

# Set other defaults.

my $default_disk_size = $yaml->{'default_disk_size'} || 10;

my $default_ram = $yaml->{'default_ram'} || 1024;

my $default_vcpu = $yaml->{'default_vcpu'} || 1;

my $domain = $yaml->{'domain'} || 'localdomain';

my $ansible_inventory = $yaml->{'ansible_inventory'} || 0;

my $default_user_data_file = $yaml->{'default_user_data_file'} || 'user-data';

die "Default user-data file $default_user_data_file not found!\n" unless (-f $default_user_data_file);

# Processing the instances hash in the YAML file.

my($ansible_groups, $instances, $reservations, $ansible_hosts);

my(@static_ips, @checked_bridges);

print "Creating disk images for any new instances.\n";

while (my($inst, $vals) = each %{$yaml->{'instances'}}) {

# Allow setting domain: <domain> on an individual instance.

my $inst_domain = $vals->{'domain'} || $domain;

$ansible_hosts->{"${inst}.${inst_domain}"} = {};

# ip: <ip> is optional, as you may want to configure the DHCP reservation elsewhere.

if ($vals->{'ip'}) {

die "Static IP has already been used for another instance! Please check YAML file.\n" if (grep { $_ eq $vals->{'ip'}} @static_ips);

$reservations->{"${inst}.${inst_domain}"} = {};

$reservations->{"${inst}.${inst_domain}"}->{'ip'} = $vals->{'ip'};

$ansible_hosts->{"${inst}.${inst_domain}"}->{'ip'} = $vals->{'ip'};

push(@static_ips, $vals->{'ip'});

} else {

$ansible_hosts->{"${inst}.${inst_domain}"}->{'ip'} = '';

}

# For the Ansible inventory file. If ansible_groups is specified for the instance,

# add the instance to that group.

if ($vals->{'ansible_groups'}) {

foreach my $group (@{$vals->{'ansible_groups'}}) {

unless ($ansible_groups->{$group}) {

@{$ansible_groups->{$group}} = ();

}

push(@{$ansible_groups->{$group}}, "${inst}.${inst_domain}");

}

}

# Skip instance if its Xen config file exists.

if (-f "/etc/xen/${inst}.cfg") {

if ($reservations->{"${inst}.${inst_domain}"}) {

open(XEN_CFG, '<', "/etc/xen/${inst}.cfg") || die "Unable to open /etc/xen/${inst}.cfg.\n";

while (my $line = <XEN_CFG>) {

if ($line =~ /mac=(\S{17}),/) {

$reservations->{"${inst}.${inst_domain}"}->{'mac'} = $1;

}

}

close(XEN_CFG);

}

next;

}

# Set skip: 1 to skip over this instance.

if (($vals->{'skip'}) && ($vals->{'skip'} == 1)) {

print "Skipping instance $inst because skip is set to 1.\n";

delete($reservations->{"${inst}.${inst_domain}"});

next;

}

# Skip if -h host1,host2 is set and host isn't in that list.

if ((@limit_hosts) && !(grep { $_ eq $inst } @limit_hosts)) {

delete($reservations->{"${inst}.${inst_domain}"});

next;

}

print "Creating instance $inst.\n";

if (!($default_image) && !($vals->{'image'})) {

die "No default image was defined nor was an image set for instance ${inst}. Please check and try again.\n";

}

# Use the default image if one isn't set for the individual instance.

my $image = $default_image;

if ($vals->{'image'}) {

$image = $vals->{'image'};

die "Image $image not found!\n" unless (-f $image);

}

my $disk_size = $vals->{'disk'} || $default_disk_size;

# Make sure that image exists and can be resized to the specified size.

&check_image($image, $disk_size);

# Use the default bridge if one isn't set for the individual instance.

# If one is specified, make sure that it exists.

my $bridge = $default_bridge;

if ($vals->{'bridge'}) {

$bridge = $vals->{'bridge'};

unless (grep { $_ eq $bridge } @checked_bridges) {

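# check_bridge dies on failure and returns no true value on success, so call it first and then record the bridge.

&check_bridge($bridge);

push(@checked_bridges, $bridge);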

}

}

my $mac = $vals->{'mac'} || &mac_gen;

if ($reservations->{"${inst}.${inst_domain}"}) {

$reservations->{"${inst}.${inst_domain}"}->{'mac'} = $mac;

}

# Use the default disk path if one isn't set for the individual instance.

# If one is specified, make sure that it exists.

my $disk_path = $default_disk_path;

if ($vals->{'disk_path'}) {

$disk_path = $vals->{'disk_path'};

die "Disk path $disk_path doesn't exist! Please check.\n" unless (-d $disk_path);

}

# Copy the cloud image to file for the instance.

my $disk_image = "${disk_path}/${inst}.raw";

# Change this to use rsync if you want a progress bar. It takes some time.

system("cp $image $disk_image") == 0 || die "cp $image $disk_image failed!\n";

system("/usr/bin/qemu-img resize -q -f raw $disk_image ${disk_size}G") == 0 || die "qemu-img resize -q -f raw ${disk_image} ${disk_size}G failed!\n";

# Processing the additional_disks section for the instance, if specified.

# It follows the format of:

# name:

# size: 30

# format: qcow2|raw

# path: <directory>

# Size is in GB and format must be raw or qcow2.

# Note: this doesn't format the disk inside the instance. You must use cloud-init or do it manually.

my @disk_ids = map { "xvd${_}" } ('b' .. 'z');

my @additional_disks;

if ($vals->{'additional_disks'}) {

while (my($disk_name, $disk_params) = each %{$vals->{'additional_disks'}}) {

die "You must specify a size in GB for disk ${disk_name}, instance ${inst}!\n" unless (($disk_params->{'size'}) && ($disk_params->{'size'} =~ /^\d+$/));

my $size = $disk_params->{'size'};

# Default to raw if format isn't specified.

my $format = $disk_params->{'format'} || 'raw';

die "'format' must be raw or qcow2 for disk ${disk_name}, instance ${inst}!\n" unless (grep { $_ eq $format } qw(raw qcow2));

my $add_disk_path = $disk_path;

if ($disk_params->{'path'}) {

$add_disk_path = $disk_params->{'path'};

die "Additional disk path $add_disk_path not found! Please check.\n" unless (-d $add_disk_path);

}

my $id = shift(@disk_ids);

system("/usr/bin/qemu-img create -q -f $format ${add_disk_path}/${inst}_${disk_name}.${format} ${size}G") == 0 ||

die "qemu-img create -q -f $format ${add_disk_path}/${inst}_${disk_name}.${format} ${size}G failed!\n";

push(@additional_disks, "'${add_disk_path}/${inst}_${disk_name}.${format},${format},${id},w'");

}

}

# Use the default cloud-init user-data file unless one is specified for the instance.

my $user_data_file = $default_user_data_file;

if ($vals->{'user_data_file'}) {

$user_data_file = $vals->{'user_data_file'};

die "user-data file $user_data_file not found!\n" unless (-f $user_data_file);

}

# Create the cloud-init ISO for the instance and add it to the additional_disks array.

&create_ci_iso($inst, $user_data_file);

my $ci_disk_id = shift(@disk_ids);

push(@additional_disks, "'/tmp/${inst}_ci.iso,,${ci_disk_id},cdrom'");

$instances->{$inst} = {

'bridge' => $bridge,

'ram' => $vals->{'ram'} || $default_ram,

'disk_image' => $disk_image,

'vcpu' => $vals->{'vcpu'} || $default_vcpu,

'mac' => $mac,

'additional_disks' => \@additional_disks

};

}

# Unless nodnsmasq: 1 is set in the YAML file, configure the dnsmasq reservation file.

unless ($nodnsmasq == 1) {

&clean_leases_file(@static_ips);

&parse_reservations_file("99_${proj_name}.conf", 'reservations.tt', $reservations);

}

# If ansible_inventory: 1 is set in the YAML file, process the Ansible inventory template <project>_inventory.

if ($ansible_inventory == 1) {

&parse_ansible_inventory("${proj_name}_inventory", 'ansible_inventory.tt', $ansible_hosts, $ansible_groups);

}

# Start each instance with the cloud-init ISO attached. Then re-parse the Xen config file to remove the cloud-init disk for future start-ups.

while (my($inst, $vals) = each %{$instances}) {

my $additional_disks_list = join(', ', @{$vals->{'additional_disks'}});

# Parse with the cloud-init ISO.

&parse_xen_conf($inst, $vals->{'bridge'}, $vals->{'ram'}, $vals->{'vcpu'}, $vals->{'disk_image'}, $vals->{'mac'}, $additional_disks_list, 'xen.tt');

print "Starting instance ${inst}.\n";

system("/usr/sbin/xl create /etc/xen/${inst}.cfg") == 0 || die "xl create command failed to run for ${inst}! Check output above.\n";

# The cloud-init ISO will be the last disk in the array. This removes it and "re-joins" the additional disk list.

pop(@{$vals->{'additional_disks'}});

if (@{$vals->{'additional_disks'}}) {

$additional_disks_list = join(', ', @{$vals->{'additional_disks'}});

} else {

$additional_disks_list = '';

}

# Parse without the cloud-init ISO.

&parse_xen_conf($inst, $vals->{'bridge'}, $vals->{'ram'}, $vals->{'vcpu'}, $vals->{'disk_image'}, $vals->{'mac'}, $additional_disks_list, 'xen.tt');

if (($vals->{'autostart'}) && ($vals->{'autostart'} == 1)) {

print "Setting VM to be autostarted as requested.\n";

symlink("/etc/xen/${inst}.cfg", "/etc/xen/auto/${inst}.cfg");

}

}
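The two-pass config generation at the end of the script relies on the cloud-init ISO always being the last entry in the disk list. Here is a small, self-contained sketch of that idea. build_disk_specs is a hypothetical helper, and it sorts the disk names for repeatable output, whereas the script walks the hash with each.

```perl
#!/usr/bin/perl
use strict;
use warnings;

# Hypothetical helper: assign xvdb, xvdc, ... to the data disks, then give the
# cloud-init ISO the next free ID so that it is always the last entry.
sub build_disk_specs {
    my ($inst, $disk_path, $additional_disks) = @_;
    my @disk_ids = map { "xvd${_}" } ('b' .. 'z');
    my @specs;
    foreach my $disk_name (sort(keys(%{$additional_disks}))) {
        my $format = $additional_disks->{$disk_name}->{'format'} || 'raw';
        my $id     = shift(@disk_ids);
        push(@specs, "'${disk_path}/${inst}_${disk_name}.${format},${format},${id},w'");
    }
    my $ci_id = shift(@disk_ids);
    push(@specs, "'/tmp/${inst}_ci.iso,,${ci_id},cdrom'");
    return @specs;
}

my @specs = build_disk_specs('galera1', '/srv/xen', { data => { size => 30, format => 'qcow2' } });
print 'First boot:  ', join(', ', @specs), "\n";

# Drop the cloud-init ISO so that later boots only see the data disks.
pop(@specs);
print 'Later boots: ', join(', ', @specs), "\n";
```

Because the ISO is pushed last, a single pop is enough to produce the disk list for the second, permanent version of the config file.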

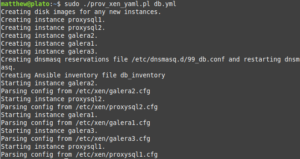

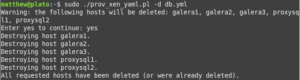

I’m not going to claim that this is the cleanest or most beautiful Perl ever written, but it does what it needs to do. I’m certain that some time in the future I will discover issues with this script and revise it. Below are some screenshots of it in use with the db.yml file:

Conclusion and future for this script

I had a lot of fun writing this script. It made Xen more useful to me in a Terraform-ish way. It was fun digging into Perl and the Template-Toolkit and writing something that could actually be of use to me.

My next task will be to modify this script for use with libvirt and KVM. I’ve already done most of the work on this, but decided that it should be in its own post. I envision this as a sort of “quick-and-dirty” Terraform that can be used to turn up a list of VMs quickly, allowing me to focus on configuring what runs inside the VMs, instead of the tool that creates the VMs. Porting this script to KVM will also make it more useful, as I can then use Enterprise Linux images.

As for Xen, this might be my last post on it for a while. Down the road, I may look at new things to try with it, such as BSD OSs. I still like the fact that it uses simple configuration files and has less bloat than libvirt. However, I’m not sure how useful it is in 2025.

I hope that this post was enjoyable for someone besides myself. As always, thanks for reading!